Part 1: Implementing a RAG chatbot with Vector Search, BGE, LangChain and Llama 2 on Databricks

Part 1: Data ingestion and chunk preparation for embeddings

A step-by-step guide to implementing your own RAG+GenAI app on Databricks

In this new series of posts, we will show how to create your own GenAI application on Databricks, step by step.

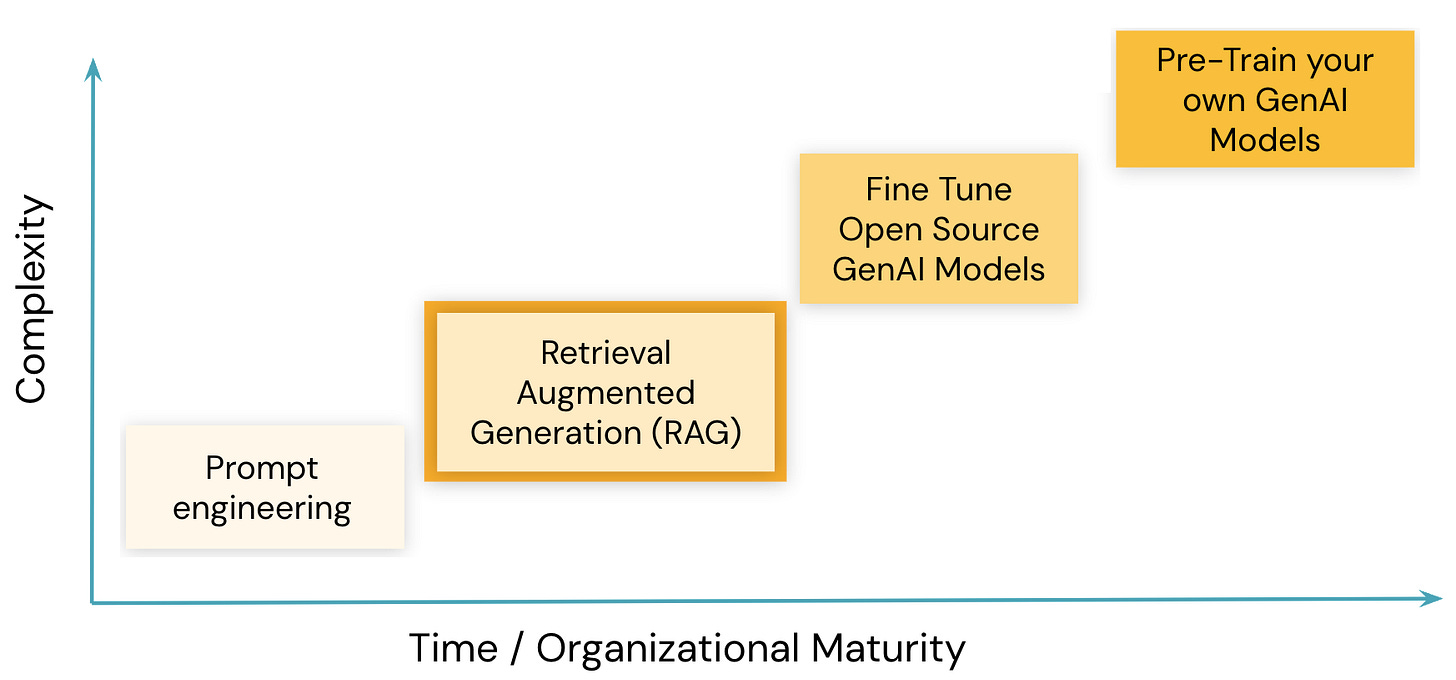

What is Retrieval Augmented Generation (RAG) for LLMs?

RAG is a powerful and efficient GenAI technique that allows you to improve model performance by leveraging your own data (e.g., documentation specific to your business), without the need to fine-tune the model.

This is done by providing your custom information as context to the LLM. In other words, we augment the prompt (the user's question) by adding documents related to that question. This reduces hallucinations and lets the LLM produce answers grounded in company-specific data, without making any changes to the original model.
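A minimal sketch of that augmentation step, assuming a simple instruction template of our own: the template wording and the `build_rag_prompt` name are illustrative, not a Databricks or LangChain API.

```python
def build_rag_prompt(question: str, documents: list[str]) -> str:
    """Augment a user question with retrieved documents used as context."""
    # Number each document so the model (and a human reviewer) can tell them apart.
    context = "\n\n".join(
        f"[Document {i}]\n{doc.strip()}" for i, doc in enumerate(documents, start=1)
    )
    # Illustrative instruction template; tune the wording for your own assistant.
    return (
        "You are an assistant for Databricks users. "
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


if __name__ == "__main__":
    docs = [
        "Delta Lake is the default table format on Databricks.",
        "Unity Catalog provides centralized governance for data and AI assets.",
    ]
    print(build_rag_prompt("What is Unity Catalog?", docs))
```

The model itself is untouched: only the text sent to it changes, which is why RAG needs no fine-tuning.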

RAG has shown success in chatbots and Q&A systems that need to maintain up-to-date information or access domain-specific knowledge.

Databricks just launched a full suite of RAG tools to help enterprises build production-grade GenAI applications. Let’s dive in and create our first GenAI app.

Want to try this yourself? The full demo with code details is available!

Implementing your own Databricks Assistant

We will show you how to build and deploy your custom chatbot, answering questions on any custom or private information. This first post focuses on Data Preparation.

We will specialize our chatbot to answer questions about Databricks, feeding articles from the databricks.com documentation to the model so it can give accurate answers.

Here is the flow we will implement:

Part 1: Data preparation and chunk creation

Building our knowledge base

Preparing high-quality data is key for your chatbot performance. While this step might seem trivial and not as fun as crafting prompts, we strongly recommend taking time to implement these next steps properly with your own dataset.

The Databricks Data Intelligence Platform provides state-of-the-art solutions to accelerate your AI and LLM projects, and it also simplifies data ingestion and preparation at scale.

In this first section, we will use Databricks documentation from docs.databricks.com.

Download the web pages

To keep our demo simple, we will read the Databricks documentation sitemap.xml, download all the HTML pages in parallel, and save them to a bronze Delta Table in Unity Catalog.
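The `download_databricks_documentation_articles` helper called in the following snippet isn't defined in this post. As a rough, dependency-free sketch of the same idea (not the demo's code), page URLs can be read from the `<loc>` entries of a standard sitemap.xml (sitemaps.org schema) and fetched in parallel threads. `parse_sitemap`, `fetch_url`, and `download_all` are hypothetical names, and the sketch returns a plain dict rather than a Spark DataFrame.

```python
import xml.etree.ElementTree as ET
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List
from urllib.request import urlopen

# Namespace used by standard sitemap.xml files (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text: str) -> List[str]:
    """Return every page URL listed in the <url><loc> entries of a sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


def fetch_url(url: str, timeout: float = 10.0) -> str:
    """Download one page; assumes UTF-8 content and replaces undecodable bytes."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def download_all(urls: List[str], fetch: Callable[[str], str] = fetch_url,
                 max_workers: int = 16) -> Dict[str, str]:
    """Fetch all URLs in parallel threads and return {url: html}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() keeps input order, so zipping with urls pairs each page with its URL.
        return dict(zip(urls, pool.map(fetch, urls)))
```

Threads suit this I/O-bound work. A production version would also want retries, rate limiting, and per-URL error handling; here a failed request simply raises.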

```python
# Download Databricks documentation to a DataFrame
doc_articles = download_databricks_documentation_articles()

# Write the full pages into a Delta table
doc_articles.write.mode('overwrite').saveAsTable("raw_documentation")
```

Splitting the HTML page into small text chunks

Some of our documentation pages are fairly long. Used whole, they would be too big for our prompt: we couldn't include several documents as RAG context without exceeding the model's maximum input size. Some recent studies also suggest that a bigger window isn't always better, as LLMs tend to focus on the beginning and the end of the prompt.

Finding the right chunk size depends on your final model's capabilities (max window size), on your documents, and on the cost and latency you can accept. Ultimately, this remains a hyperparameter you have to tune based on your documents (e.g., is it better to inject the 10 closest chunks or 2 bigger ones?).
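To make that trade-off concrete, here is a small sketch that greedily packs the best-ranked chunks into a fixed context budget. The function name, the 2,000-token budget, and the chunk sizes are illustrative assumptions; real token counts should come from your model's tokenizer.

```python
from typing import List, Tuple


def pack_chunks(ranked_chunks: List[Tuple[str, int]], max_context_tokens: int) -> List[str]:
    """Keep the best-ranked chunks whose token counts fit in the context budget.

    ranked_chunks: (chunk_text, token_count) pairs, most relevant first.
    """
    selected, used = [], 0
    for text, n_tokens in ranked_chunks:
        if used + n_tokens > max_context_tokens:
            break  # stop at the first chunk that no longer fits, preserving rank order
        selected.append(text)
        used += n_tokens
    return selected


small = [(f"small chunk {i}", 200) for i in range(10)]
big = [("big chunk 0", 1500), ("big chunk 1", 1500)]
print(len(pack_chunks(small, 2000)))  # 10
print(len(pack_chunks(big, 2000)))    # 1
```

With the same budget, ten 200-token chunks all fit, while only one 1,500-token chunk does: smaller chunks give the model more distinct sources, bigger ones give more surrounding context per source.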

LLM Window size and Tokenizer

The same sentence can be split into different tokens by different models. Each LLM ships with a tokenizer that you can use to count the tokens in a given sentence; the count is usually higher than the number of words (see the Hugging Face or OpenAI documentation).

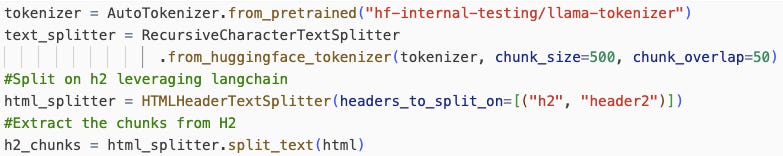

In our case, we'll split these articles on HTML `h2` tags, remove the HTML, and use LangChain to ensure that each chunk is less than 500 tokens. This also keeps each chunk below our embedding model's maximum input size (1024 tokens for BGE).
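The post does this with LangChain, and the exact splitter configuration lives in the full demo. As a dependency-free illustration of the same idea (not the demo's code), the sketch below uses Python's built-in `html.parser` to start a new section at each `h2`, drop the markup along with `script`/`style` content, and cap every chunk at `max_tokens`. Counting whitespace-separated words as tokens is purely a stand-in assumption; in practice, pass a `count_tokens` function built on your model's tokenizer.

```python
from html.parser import HTMLParser
from typing import Callable, List


class _H2SectionParser(HTMLParser):
    """Collect visible text, starting a new section at every <h2> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.sections: List[List[str]] = [[]]
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "h2":
            self.sections.append([])  # each <h2> opens a new section

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.sections[-1].append(data.strip())


def whitespace_token_count(text: str) -> int:
    # Stand-in only: real token counts must come from your model's tokenizer.
    return len(text.split())


def split_html_on_h2(html: str, max_tokens: int = 500,
                     count_tokens: Callable[[str], int] = whitespace_token_count) -> List[str]:
    """Split HTML into plain-text chunks on <h2> boundaries, each <= max_tokens."""
    parser = _H2SectionParser()
    parser.feed(html)
    parser.close()
    chunks: List[str] = []
    for parts in parser.sections:
        current: List[str] = []
        for word in " ".join(parts).split():
            candidate = " ".join(current + [word])
            if current and count_tokens(candidate) > max_tokens:
                chunks.append(" ".join(current))  # flush the chunk that still fits
                current = [word]
            else:
                current.append(word)
        if current:  # empty sections (e.g. nothing before the first <h2>) are skipped
            chunks.append(" ".join(current))
    return chunks
```

Splitting on `h2` first keeps each chunk on a single topic; the token cap only kicks in for sections that are still too long.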

Make sure the tokenizer you use matches the one used by your model.

Remember that the following steps are specific to your dataset. This is a critical part of building a successful RAG assistant. Always take time to manually review the chunks created, ensuring that they make sense and contain relevant information.

All we have to do is wrap our tokenizer-based splitting logic in a UDF named `split_chunks` and use it to create our final table of chunks. The table will then be created automatically.
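Conceptually, `split_chunks` maps each page to a list of chunks, and the final table holds one row per chunk; in Spark that is typically a UDF returning an array, followed by `explode`. The plain-Python sketch below only illustrates the resulting shape. `build_chunk_rows` and the column names (`id`, `url`, `chunk_index`, `content`) are hypothetical, not the demo's schema.

```python
from typing import Callable, Dict, Iterable, List


def build_chunk_rows(pages: Iterable[Dict[str, str]],
                     split_chunks: Callable[[str], List[str]]) -> List[Dict[str, object]]:
    """Turn {"url", "text"} pages into one row per chunk (what explode() does in Spark)."""
    rows: List[Dict[str, object]] = []
    for page in pages:
        for position, chunk in enumerate(split_chunks(page["text"])):
            rows.append({
                "id": len(rows),          # unique, increasing id per chunk
                "url": page["url"],       # keep the source page for citations
                "chunk_index": position,  # order of the chunk within its page
                "content": chunk,
            })
    return rows


if __name__ == "__main__":
    # Hypothetical splitter for the demo: one chunk per blank-line-separated paragraph.
    def split_on_blank_lines(text: str) -> List[str]:
        return [p.strip() for p in text.split("\n\n") if p.strip()]

    pages = [{"url": "https://example.com/page.html", "text": "First part.\n\nSecond part."}]
    for row in build_chunk_rows(pages, split_on_blank_lines):
        print(row)
```

Keeping the source URL on every row makes it easy to review chunks manually and, later, to cite where an answer came from.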

A note on unstructured documents (pdf, docx…)

It’s also easy to process your documents incrementally. Incoming files can be saved to a volume in Unity Catalog, which can automatically trigger a job that extracts the text and creates the chunks. Here is how you can do it:

The unstructured library makes it easy to extract text from our documents using its `partition` function:

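```python
import io
from unstructured.partition.auto import partition

def extract_doc_text(x: bytes) -> str:
    # partition() detects the file type and returns document elements; join their text
    return "\n".join(el.text for el in partition(file=io.BytesIO(x)))
```

It’s then easy to create smaller chunks from the full document in a pandas UDF. A good solution is to use the llama-index `SentenceSplitter` to split on full sentences: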

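```python
from transformers import AutoTokenizer
# Import paths below are for llama-index >= 0.10
from llama_index.core import Document, set_global_tokenizer
from llama_index.core.node_parser import SentenceSplitter

# First set the tokenizer to llama2 so chunk sizes are counted in Llama 2 tokens
set_global_tokenizer(AutoTokenizer.from_pretrained("hf-internal-testing/llama-tokenizer").encode)

# Create the splitter from llama_index: ~500-token chunks with 50 tokens of overlap
splitter = SentenceSplitter(chunk_size=500, chunk_overlap=50)

def split_text_on_sentences(txt: str) -> list[str]:
    nodes = splitter.get_nodes_from_documents([Document(text=txt)])
    return [n.text for n in nodes]
```

Data preparation: conclusion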

We introduced RAG and showed how to ingest and prepare your knowledge base as a Delta Table, creating smaller chunks that we’ll use to augment our chatbot prompt.

This is a crucial step in making your RAG application behave properly, and you should definitely spend time making sure all your chunks make sense, adding extra processing steps if needed.

What’s next?

In our next post, we’ll cover how to add Vector Search on top of your Delta Table. Vector Search will compute and index embeddings (vectors representing each chunk). Once the Delta Table is indexed, Databricks provides a simple way to search, in real time, for the chunks most related to any given question!
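Vector Search handles this for us. For intuition only, the sketch below ranks chunks by cosine similarity between a question embedding and chunk embeddings. The 3-dimensional vectors are made-up stand-ins for real BGE embeddings, and this brute-force loop scores every chunk, which a real vector index is designed to avoid at scale.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_chunks(query_vec: Sequence[float], index: Dict[str, Sequence[float]],
                 k: int = 2) -> List[Tuple[str, float]]:
    """Brute-force nearest chunks: score every chunk, return the k best."""
    scored = [(chunk, cosine_similarity(query_vec, vec)) for chunk, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy "embeddings", made up for illustration.
index = {
    "How to create a Delta table": [0.9, 0.1, 0.0],
    "Configuring cluster policies": [0.1, 0.9, 0.1],
    "Delta table OPTIMIZE and Z-ordering": [0.8, 0.2, 0.1],
}
# The two Delta-related chunks rank highest for a "Delta-like" question vector.
print(top_k_chunks([1.0, 0.0, 0.0], index, k=2))
```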

Subscribe now to make sure you won’t miss the next post in the series!