Revolutionizing Data Management: GenAI's Impact on Modern Data Platforms and Lakehouses

Introducing the Data Intelligence Platform and Databricks GenAI capabilities. Join us this December for a deep dive into AI & LLMs, with a step-by-step guide to implementing your own AI Assistant.

The rise of (Gen)AI & Lakehouses

In 2009, Apache Spark™ was conceived within a UC Berkeley research lab to build a recommender model for the Netflix Prize ($1M for the best video recommendations). A few years later, Databricks was born: the Data + AI platform, surfing on 3 major trends:

Open source systems (Spark, Delta Lake, MLflow…)

Cloud-based

AI, with the belief that AI will ultimately be part of everything

Over the 2010s, most enterprise AI was boring. While omnipresent in the industry, practical applications often remained at the edges, optimizing the business for the few extra points of profitability required to thrive, but rarely central to company data strategies and transformation.

Things changed in late 2022 with the release of ChatGPT and the world realizing that AI will actually eat all software. GenAI has since evolved at a rapid pace, with LLM capabilities becoming more and more powerful, and the applications almost unlimited.

GenAI will disrupt your world

It is not hard to imagine a world where AI-based assistants will be part of our daily lives, professional and personal. New company leaders will emerge from this evolution. They will be the ones mastering the (Gen)AI trend and understanding how to scale their business:

At a purely technological level, building and releasing new products faster. Engineers will be able to do more, faster, and at a lower cost, focusing on the customers and not the tech.

Such leaders will tame LLMs to scale their internal organization and create smarter processes. Scale and growth will be accelerated; biased manual reporting will be a thing of the past and insights will be extracted without humans slowing down the process.

Final products and customer relations will evolve with hyper-personalized content and assistants, smarter applications, and more importantly simpler interfaces.

We strongly believe this will be the fate of all industries. Ultimately, leaders will be Data + AI companies.

Modern Data Platforms will be disrupted by AI

Just like any industry, it’s easy to imagine that Lakehouses, and by extension Modern Data Platforms, will be disrupted by LLMs.

By definition, disruption is hard to predict, but we can imagine a few of the ingredients required to make this cocktail happen:

Data pipelines are becoming much easier to build, and low-code will become the standard with AI assistants accelerating users (helping to code, debug, and suggest improvements)

Your platform understands your data, including your own vocabulary: that your end of quarter is in February, or what a Premium customer means.

You can find any data assets (table, notebooks, queries, AI models, dashboard…) using a simple semantic search.

The whole engine is becoming smarter and AI-based: data anomaly detection, smart query scheduling to reduce your warehouse idle time, detection of abnormal or slow job executions, automatic data layout, auto-indexing, etc.

Ultimately, end users (analysts) can ask simple questions in plain English, and because the knowledge engine understands all your data and your context, it will provide direct answers (text-to-SQL), and even dashboards and recommendations (text-to-insights).

This is what we call Data Intelligence Platforms, built on top of your lakehouse foundations.

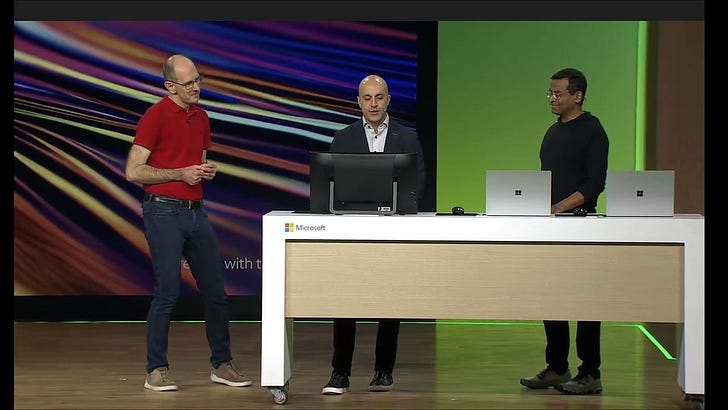

Want to see this in action? Watch Ali Ghodsi, Databricks CEO, introducing the Data Intelligence Platform at Microsoft Ignite:

Don’t miss our LLM / AI-assistant deep-dive in December!

As always with Databricks, major GenAI capabilities are being cooked for you and will be announced very soon. To support that, we will release a series of hands-on posts in December to show you how to build your own chatbot assistants, leveraging Foundation Models, step by step.

Don’t forget to subscribe!

Watch Databricks Q4 Roadmap Webinar

LLMs are exciting, but Databricks is also working on major improvements across the whole platform, making your data projects even simpler.

Watch the Databricks Q4 Product Roadmap, covering all the upcoming features (Data Engineering, Databricks SQL, AI, Unity Catalog, Infrastructure…) so you can prioritize your plans!

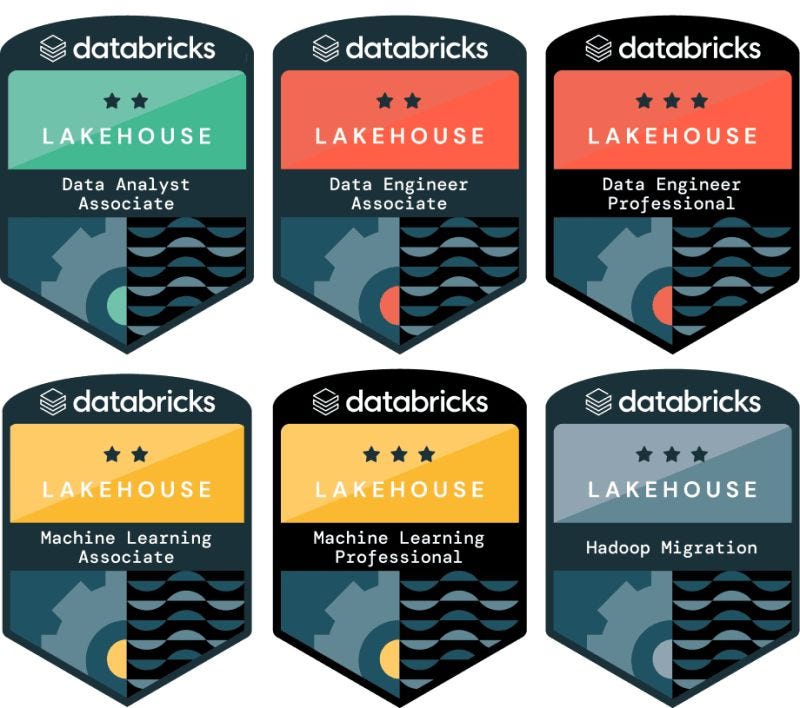

Training for all, and 50% off certifications

Christmas is early with the Databricks Virtual Learning Festival. From 20 Nov to 15 Dec 2023, if you complete a Self-paced Learning plan on Databricks Academy, you will be eligible to receive a unique 50%-off certification voucher.

Here is the list of the courses that are part of the program (see conditions on Databricks Academy website):

Data Engineering with Databricks

Advanced Data Engineering with Databricks

Data Analysis with Databricks SQL

Scalable ML with Apache Spark

ML in Production

AWS, Azure and GCP Databricks Platform Architect

Data and AI Summit CFP is now open!

Do you have a compelling GenerativeAI, data science, or ML story to share with the community? Or maybe you've crafted noteworthy features in open source tech? The Data and AI Summit community wants to hear from you.

Call for Presentations is now open! Submissions end Jan 5.

Other updates

Solutions accelerators are now available in Databricks Marketplace

Databricks Marketplace now gives you access to Databricks Solution Accelerators, purpose-built guides that provide fully functional notebooks, best-practice guidance, and sample data to get you set up quickly with many popular use cases on Databricks.

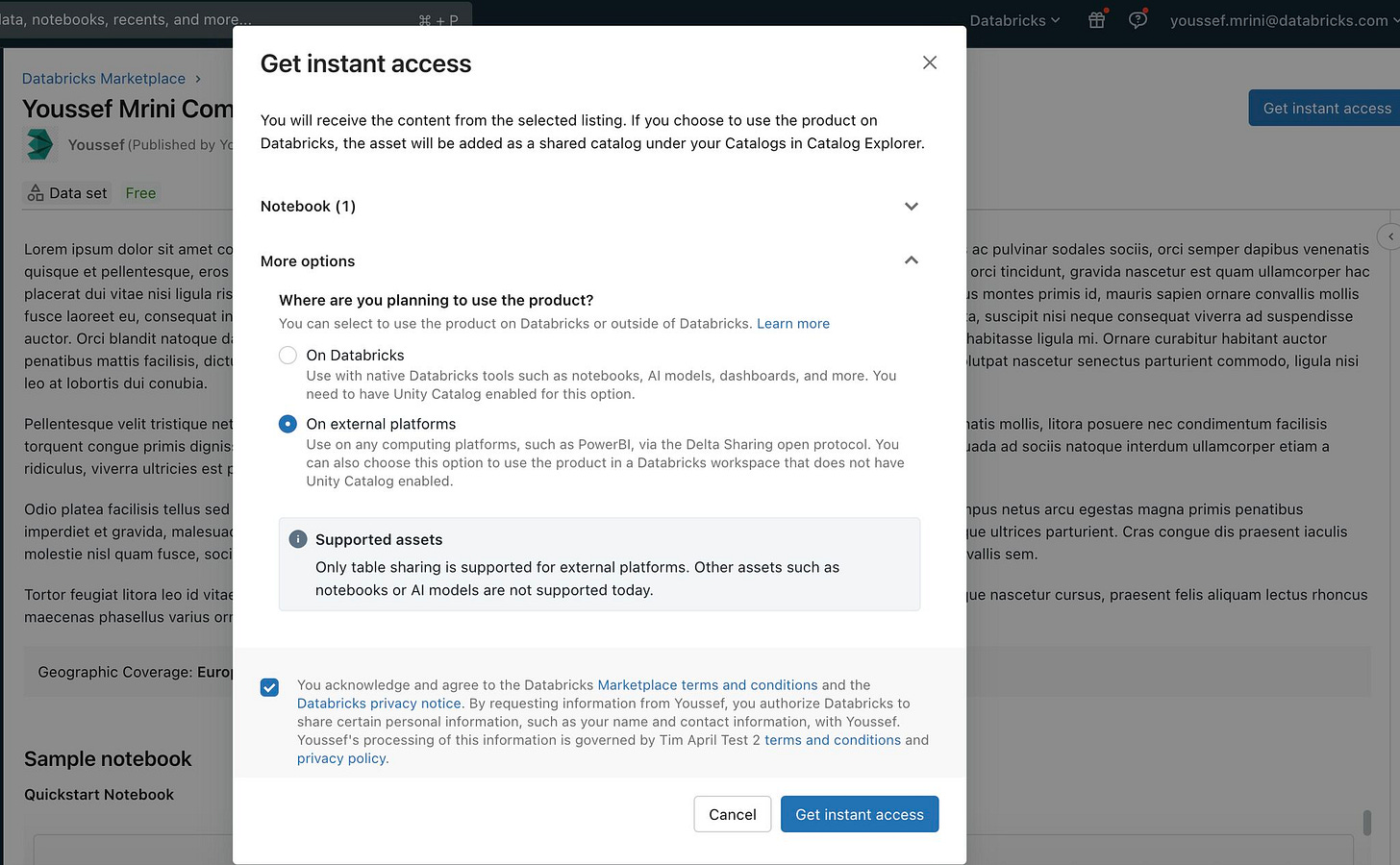

Consume data products in Databricks Marketplace using external platforms

Consumers without a Unity Catalog-enabled Databricks workspace can now access data products in Databricks Marketplace. You can use Delta Sharing open sharing connectors to access Marketplace data using a number of common platforms, including Microsoft Power BI, Microsoft Excel, pandas, Apache Spark, and non-Unity Catalog Databricks workspaces. Read the documentation
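As a minimal sketch of the pandas route, assuming the open-source delta-sharing Python client is installed and that you have downloaded a credential (profile) file from the provider: the client addresses a table as profile-file#share.schema.table. The file name and the share, schema, and table names below are hypothetical placeholders, not values from a real listing.

```python
def shared_table_url(profile_path: str, share: str, schema: str, table: str) -> str:
    """Return the address the delta-sharing client expects for one shared table."""
    return f"{profile_path}#{share}.{schema}.{table}"


def load_shared_table(profile_path: str, share: str, schema: str, table: str):
    """Load a shared table into a pandas DataFrame (needs network access and credentials)."""
    # Imported lazily so the URL helper works even without the package installed.
    import delta_sharing  # pip install delta-sharing

    return delta_sharing.load_as_pandas(
        shared_table_url(profile_path, share, schema, table)
    )


# Hypothetical names: replace with the values from your Marketplace listing.
url = shared_table_url("config.share", "my_share", "my_schema", "my_table")
print(url)
```

Calling load_shared_table with the same four arguments would return the table as a DataFrame once a valid profile file is in place.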

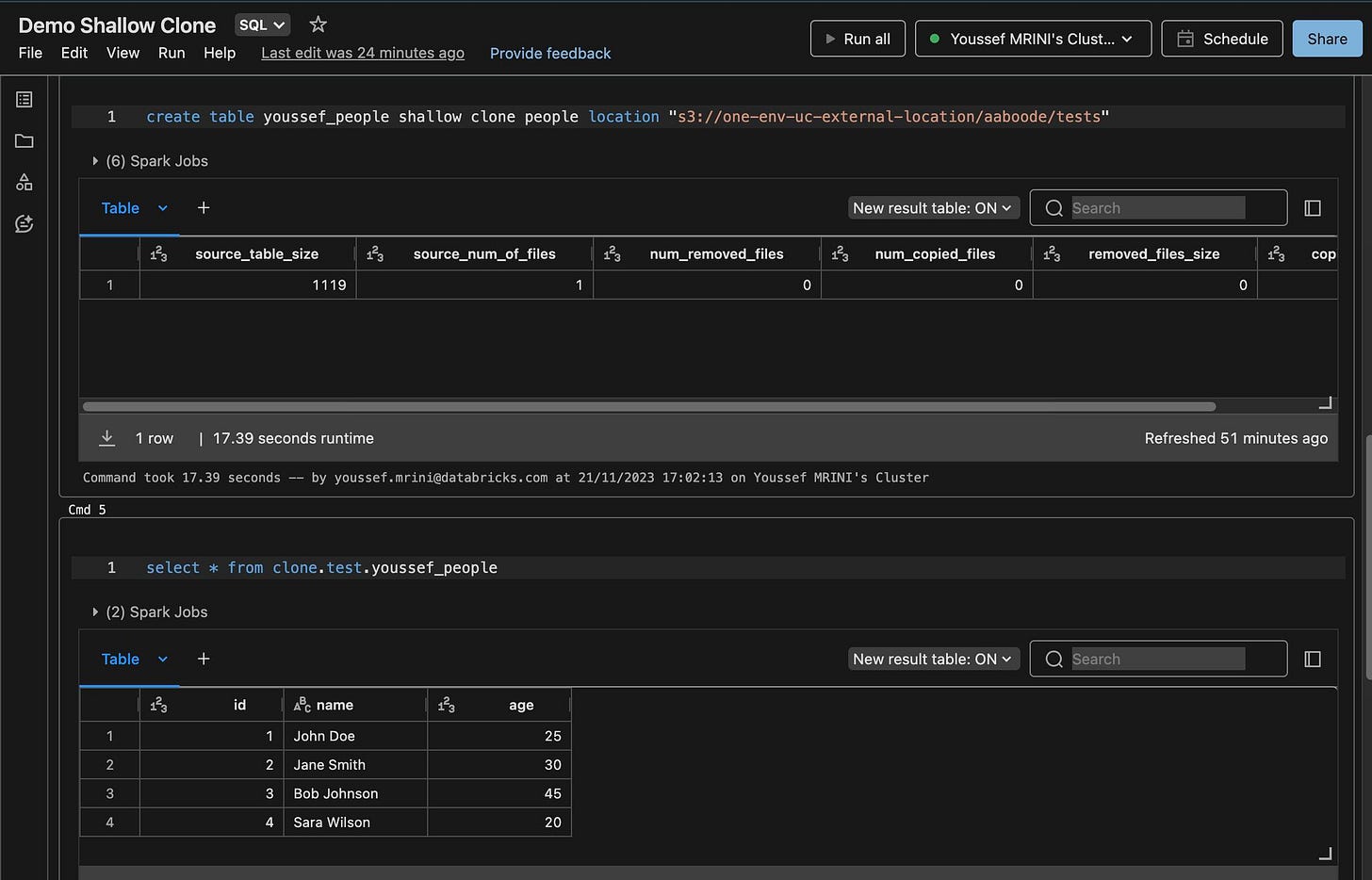

Unity Catalog: Shallow Clone on external tables

Shallow clone lets you run experiments without copying large tables or modifying the source table. It’s now available on external tables. Explore the documentation for more details.
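For illustration, here is a small sketch of what creating such a clone could look like from a notebook. It only builds the SQL statement; the catalog, schema, and table names are hypothetical, and running it assumes a Databricks environment where a SparkSession named spark is available.

```python
def shallow_clone_sql(source: str, target: str) -> str:
    """Build a CREATE TABLE ... SHALLOW CLONE statement for Databricks SQL."""
    return f"CREATE TABLE IF NOT EXISTS {target} SHALLOW CLONE {source}"


# Hypothetical three-level Unity Catalog names.
stmt = shallow_clone_sql(
    source="main.sales.orders_external",
    target="main.sandbox.orders_experiment",
)
print(stmt)

# In a Databricks notebook, where a SparkSession named spark is available:
# spark.sql(stmt)
```

The clone only references the source data files, so experiments on the target table leave the source untouched.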

In a nutshell - the deep stuff

Databricks Runtime 14.2 is GA: Link

Row-level concurrency reduces conflicts between concurrent write operations by detecting changes at the row-level. Row-level concurrency is only supported on tables without partitioning, which includes tables with liquid clustering. Row-level concurrency is enabled by default on Delta tables with deletion vectors enabled. See Write conflicts with row-level concurrency.

Statistics collection is up to 10 times faster on small clusters when running CONVERT TO DELTA or cloning from Iceberg and Parquet tables. See Convert to Delta Lake and Clone Parquet and Iceberg tables.

You can now enable your pipelines to restart when evolved records are detected. Previously, if schema evolution happened with the from_avro connector, the new columns would return null. See Read and write streaming Avro data.

You can now use Scala scalar user-defined functions on clusters configured with shared access mode. See User-defined scalar functions - Scala.

You can now use the foreachBatch() and StreamingListener APIs with Structured Streaming. See Use foreachBatch to write to arbitrary data sinks and Monitoring Structured Streaming queries on Databricks.

The Spark scheduler now uses a new disk caching algorithm. The algorithm improves disk usage and partition assignment across nodes, with faster assignment both initially and after cluster scaling events. Stickier cache assignment improves consistency across runs and reduces data moved during rebalancing operations.
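To make the foreachBatch() item above more concrete, here is a hedged sketch of a micro-batch handler in PySpark. The target table name is hypothetical, and the streaming DataFrame in the commented usage line is assumed to exist in your notebook; this is an illustration, not the pattern from the linked documentation.

```python
TARGET_TABLE = "main.sandbox.events"  # hypothetical table name


def write_batch(batch_df, batch_id: int) -> None:
    """Append one micro-batch to a Delta table.

    Structured Streaming calls this with the micro-batch DataFrame and its id.
    """
    batch_df.write.format("delta").mode("append").saveAsTable(TARGET_TABLE)


# In a notebook, with stream_df being a streaming DataFrame (assumed to exist):
# query = stream_df.writeStream.foreachBatch(write_batch).start()
```

Inside the handler, each micro-batch is a regular DataFrame, so any batch writer can be used.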

Remember: these posts are unofficial and only reflect the author’s opinion.