What's new in Databricks - April 2024

Data+AI Summit is coming! 500+ sessions on topics including #ML, data science, data engineering, #GenAI, and data governance!

Register now and find your learning path: https://dbricks.co/4cBIag9

Get a $500 discount if you use this code to register for DAIS: SUMCL5VDG

AI, LLM & Data Science

AI is now being used in governance to analyze, clean, and understand data. Matei Zaharia, Databricks CTO, discusses his view on the future of governance + AI and his current work.

Wondering how the industry is leveraging AI within Data Platforms? This interview is for you.

Matei shares:

- Market trends and the main challenges he has seen in discussions with top customers

- How GenAI is unlocking new capabilities within Data Intelligence Platforms

- Insights into Databricks' vision and development around Unity Catalog and GenAI

Databricks released DBRX Instruct, the most powerful open source LLM

Databricks has released DBRX, available on Hugging Face and as a serverless endpoint within your Databricks workspace!

Llama 3 is now available on Databricks

Databricks Model Serving offers instant access to Meta Llama 3 via Foundation Model APIs. These APIs completely remove the hassle of hosting and deploying foundation models while ensuring your data remains secure within Databricks' security perimeter. Learn more
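As a rough illustration of what querying such an endpoint can look like, here is a minimal sketch that only builds the request URL and JSON body for a chat-style call. The workspace URL is hypothetical, and the endpoint name `databricks-meta-llama-3-70b-instruct` and the `/serving-endpoints/<name>/invocations` path are assumptions to check against your own workspace.

```python
import json


def build_chat_request(workspace_url, endpoint_name, user_prompt, max_tokens=256):
    """Return (url, json_body) for a chat-style query to a serving endpoint."""
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    body = {
        "messages": [{"role": "user", "content": user_prompt}],
        "max_tokens": max_tokens,
    }
    return url, json.dumps(body)


url, body = build_chat_request(
    "https://example.cloud.databricks.com",  # hypothetical workspace URL
    "databricks-meta-llama-3-70b-instruct",  # assumed endpoint name
    "Summarize Delta Lake in one sentence.",
)
print(url)
print(body)
```

To actually send the request, you would POST the body to the URL with any HTTP client and pass a workspace access token in the `Authorization: Bearer ...` header.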

AI Functions

Databricks AI Functions are built-in SQL functions that let you apply AI to your data directly from SQL. These functions use the Databricks Foundation Model APIs to perform tasks such as sentiment analysis, classification, translation, and more. Learn more
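To make this concrete, the sketch below assembles a SQL statement that calls two of these functions, `ai_analyze_sentiment` and `ai_classify`, on a text column. The table `main.demo.reviews`, the column `review_text`, and the labels are hypothetical placeholders. The helper only builds the query string, so it can be inspected without a workspace.

```python
def sentiment_query(table, text_column, labels):
    """Build a SQL statement that scores sentiment and classifies each row."""
    # Quote each label as a SQL string literal, doubling embedded single quotes.
    label_array = ", ".join("'" + label.replace("'", "''") + "'" for label in labels)
    return (
        f"SELECT {text_column},\n"
        f"       ai_analyze_sentiment({text_column}) AS sentiment,\n"
        f"       ai_classify({text_column}, ARRAY({label_array})) AS topic\n"
        f"FROM {table}"
    )


# Hypothetical table, column, and labels, for illustration only.
query = sentiment_query("main.demo.reviews", "review_text", ["billing", "support", "product"])
print(query)
```

In a Databricks notebook, the resulting string could be passed to `spark.sql(query)` or pasted into the SQL editor.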

Ray on Databricks is now GA

Ray is now included as part of the Databricks Machine Learning Runtime (MLR) starting from version 15.0, making it a first-class offering on the platform. This integration allows customers to easily start a Ray cluster without any additional installations. Learn more

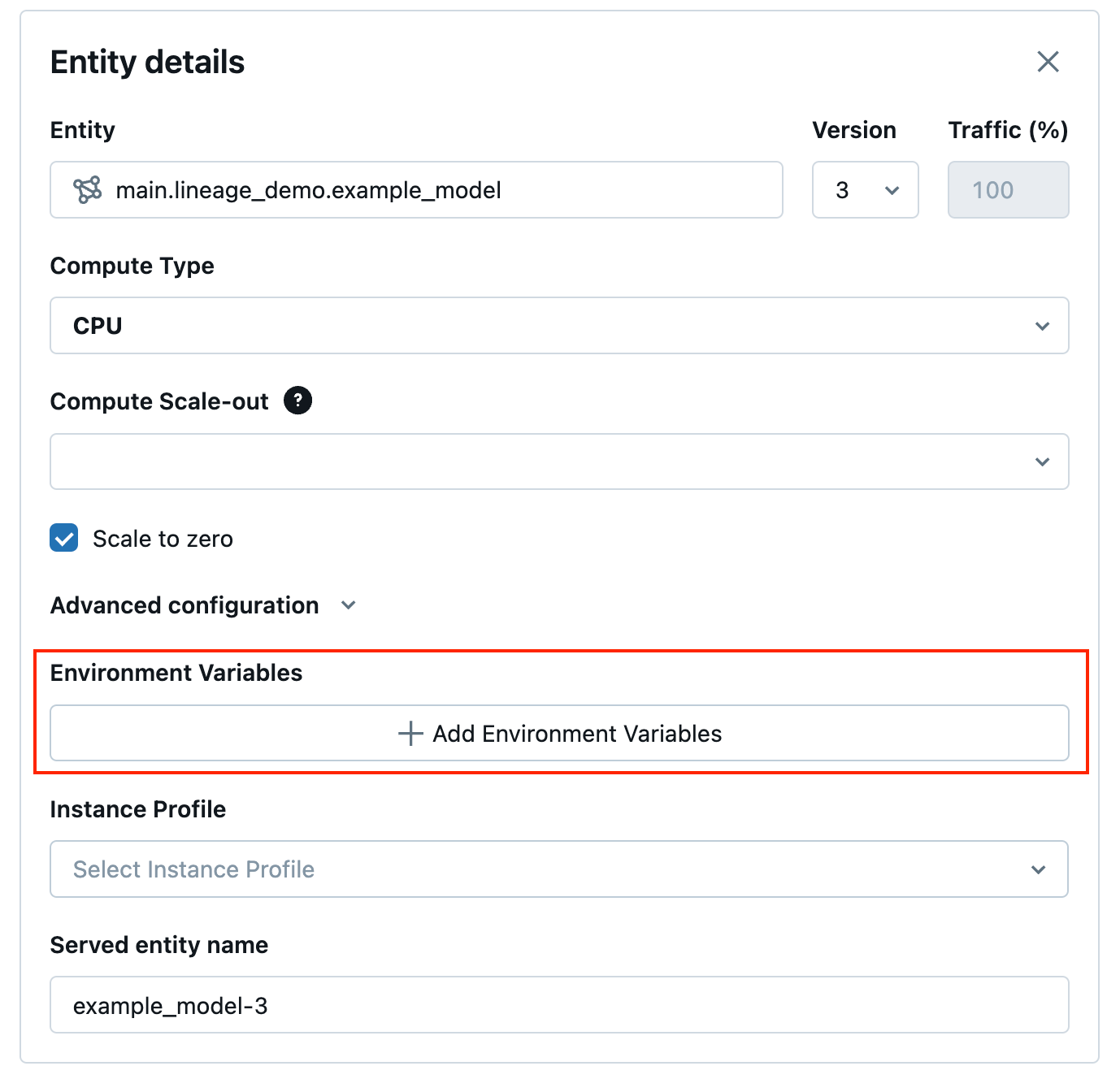

Configuring access to resources from serving endpoints is GA

You can now configure environment variables to access resources outside of your feature serving and model serving endpoints. Learn more
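As a sketch of what such a configuration might look like, the snippet below builds an endpoint config fragment with plain-text and secret-backed environment variables. The field names (`served_entities`, `environment_vars`, and so on) and the `{{secrets/<scope>/<key>}}` reference syntax are assumptions modeled on the serving endpoints REST API. The model name, secret scope, and URL are hypothetical.

```python
import json


def served_entity_with_env(entity_name, entity_version, env_vars):
    """Return a served-entity config fragment that carries environment variables."""
    return {
        "entity_name": entity_name,
        "entity_version": str(entity_version),
        "workload_size": "Small",
        "scale_to_zero_enabled": True,
        "environment_vars": dict(env_vars),
    }


config = {
    "served_entities": [
        served_entity_with_env(
            "main.demo.my_model",  # hypothetical Unity Catalog model name
            1,
            {
                "API_BASE_URL": "https://api.example.com",  # plain-text value
                "API_KEY": "{{secrets/demo_scope/api_key}}",  # assumed secret reference syntax
            },
        )
    ]
}
print(json.dumps(config, indent=2))
```

Referencing a secret rather than pasting a credential keeps the value out of the endpoint definition itself.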

Route optimization is available for serving endpoints

You can now create route-optimized serving endpoints for your model serving or feature serving workflows. Learn more

Get serving endpoint schemas

A serving endpoint query schema is a formal description of the serving endpoint using the standard OpenAPI specification in JSON format. It contains information about the endpoint including the endpoint path, details for querying the endpoint like the request and response body format, and data type for each field. This information can be helpful for reproducibility scenarios or when you need information about the endpoint, but are not the original endpoint creator or owner. Learn more
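Once you have such a schema, a few lines of code can pull out the parts described above. The sketch below walks a small hand-written OpenAPI document and lists the request-body fields and their types for each path. `SAMPLE_SCHEMA` is a hypothetical stand-in, not real endpoint output, and real schemas may nest fields more deeply than this helper handles.

```python
# Hypothetical, heavily simplified OpenAPI document for illustration.
SAMPLE_SCHEMA = {
    "openapi": "3.1.0",
    "info": {"title": "demo-endpoint", "version": "1"},
    "paths": {
        "/serving-endpoints/demo-endpoint/invocations": {
            "post": {
                "requestBody": {
                    "content": {
                        "application/json": {
                            "schema": {
                                "type": "object",
                                "properties": {"dataframe_records": {"type": "array"}},
                            }
                        }
                    }
                },
                "responses": {"200": {"description": "ok"}},
            }
        }
    },
}


def summarize_openapi(schema):
    """Map each (METHOD, path) to its top-level request-body fields and types."""
    summary = {}
    for path, operations in schema.get("paths", {}).items():
        for method, operation in operations.items():
            if not isinstance(operation, dict):
                continue  # skip non-operation entries such as shared parameters
            content = operation.get("requestBody", {}).get("content", {})
            body_schema = content.get("application/json", {}).get("schema", {})
            fields = {
                name: spec.get("type", "unknown")
                for name, spec in body_schema.get("properties", {}).items()
            }
            summary[(method.upper(), path)] = fields
    return summary


summary = summarize_openapi(SAMPLE_SCHEMA)
print(summary)
```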

Data Engineering on Databricks

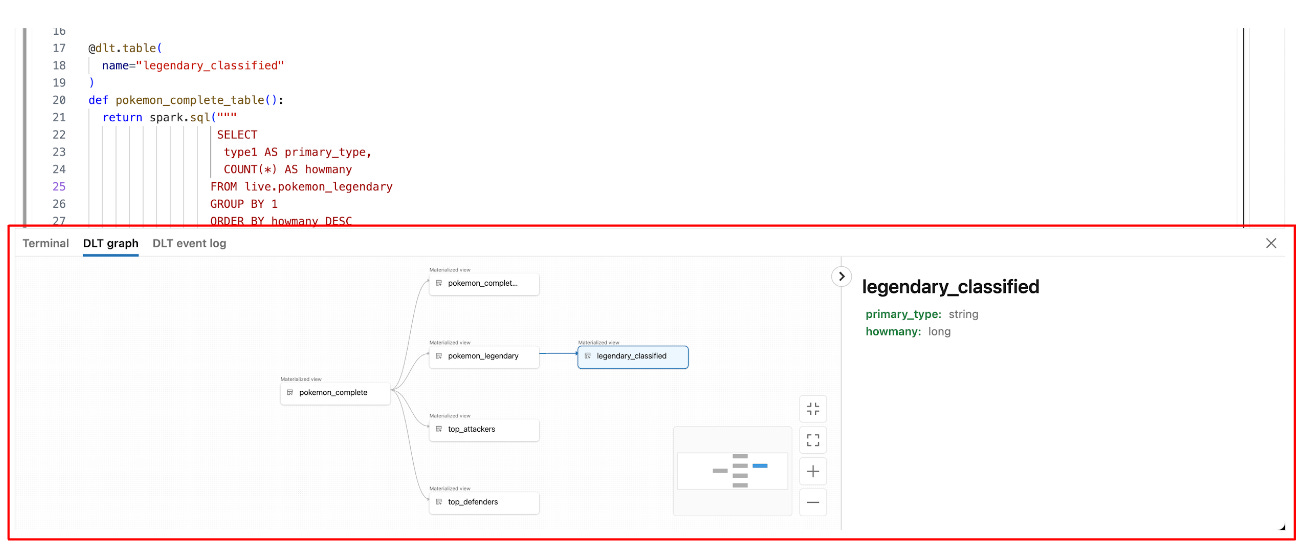

Delta Live Tables notebook developer experience

It’s now much easier to build your DLT pipelines within your notebooks! You can see and debug your pipeline right in your notebook! Learn more

Governance & Delta Sharing

Lakehouse Federation improvement

Lakehouse Federation can now federate foreign tables with case-sensitive identifiers for MySQL, SQL Server, BigQuery, Snowflake, and Postgres connections.

New columns added to the billable usage system table

The billable usage system table (system.billing.usage) now includes new columns that help you identify the specific product and features associated with the usage. Learn more

After deletion vectors, Delta Sharing now supports column mapping

Delta Sharing now supports the sharing of tables that use column mapping. Recipients can read tables that use column mapping using a SQL warehouse, a cluster running Databricks Runtime 14.1 or above, or compute that is running open source delta-sharing-spark 3.1 or above.

Platform admin

Jobs created through the UI are now queued by default

Queueing of job runs is now automatically enabled when a job is created in the Databricks Jobs UI. When queueing is enabled and a concurrency limit is reached, job runs are placed in a queue until capacity is available.
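The behavior can be pictured with a small toy model. This is only a conceptual sketch, not how Databricks implements queueing: the class name, the `max_concurrent_runs` parameter, and the first-in-first-out promotion order are all assumptions made for illustration.

```python
from collections import deque


class JobRunQueue:
    """Toy model of run queueing: start runs up to a limit, queue the rest."""

    def __init__(self, max_concurrent_runs):
        self.max_concurrent_runs = max_concurrent_runs
        self.active = set()
        self.waiting = deque()

    def submit(self, run_id):
        """Start the run if capacity allows, otherwise place it in the queue."""
        if len(self.active) < self.max_concurrent_runs:
            self.active.add(run_id)
            return "RUNNING"
        self.waiting.append(run_id)
        return "QUEUED"

    def finish(self, run_id):
        """Mark a run as done and promote the oldest queued run, if any."""
        self.active.remove(run_id)
        if self.waiting:
            next_run = self.waiting.popleft()  # FIFO order is an assumption
            self.active.add(next_run)
            return next_run
        return None


queue = JobRunQueue(max_concurrent_runs=1)
states = [queue.submit("run-1"), queue.submit("run-2")]
promoted = queue.finish("run-1")
print(states, promoted)
```

With a limit of one concurrent run, the second submission waits in the queue and starts only once the first run finishes, instead of being skipped.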

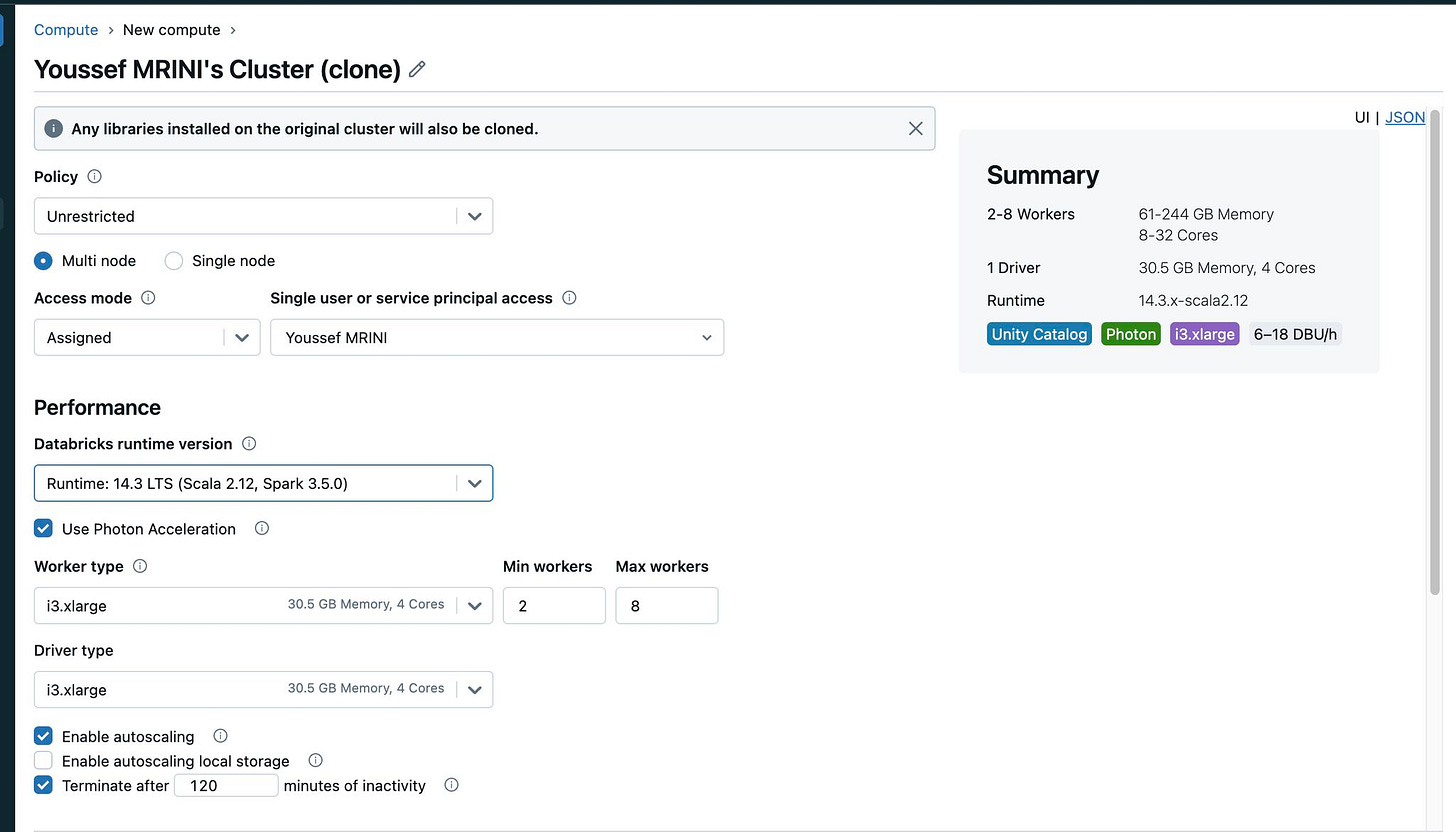

Compute cloning

When cloning compute, any libraries installed on the original compute will also be cloned. For cases where this behavior is unwanted, there is an alternative Create without libraries button on the compute clone page.

In a nutshell…

In Delta Live Tables, cluster upgrades are now performed concurrently when triggered by pipeline setting changes. Previously, a sequential process was used for these cluster upgrades, causing unnecessary downtime for some pipelines.