What's new in Databricks - December 2024

December 2024 Release Highlights

Add budget policies to model serving endpoints

Unity Catalog can now federate to Hive metastores and AWS Glue

DBR 16.1 is now GA

GenAI

Mosaic AI Model Training Serverless forecasting

Mosaic AI Model Training forecasting improves on the existing AutoML forecasting experience by running on managed serverless compute. Learn more

Streamline AI Agent evaluation using synthetic evaluation sets

Evaluate your AI agent by generating a representative evaluation set from your documents. The synthetic generation API is tightly integrated with Agent Evaluation, so you can quickly evaluate and improve the quality of your agent’s responses without going through the costly process of human labeling. Learn more

Meta Llama 3.3 70B Instruct is now available on Model Serving

Improvements in AI_QUERY

ai_query now supports the responseFormat field for structured outputs. Use responseFormat in your ai_query requests to specify the format that the queried model's response should follow.
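
As a rough sketch of how such a request might be composed, the Python snippet below builds an ai_query statement that passes a JSON-schema response format. The endpoint name, the reviews table, its review column, and the schema fields are all hypothetical, and the exact shape that responseFormat accepts should be checked against the ai_query documentation.

```python
import json

# Hypothetical JSON schema describing the structure the model should return.
response_format = {
    "type": "json_schema",
    "json_schema": {
        "name": "review_extraction",
        "schema": {
            "type": "object",
            "properties": {
                "sentiment": {"type": "string"},
                "summary": {"type": "string"},
            },
        },
        "strict": True,
    },
}


def build_ai_query_sql(endpoint: str, table: str, text_column: str) -> str:
    """Compose a SQL statement that calls ai_query with responseFormat."""
    # Serialize the schema and double any single quotes for the SQL literal.
    fmt = json.dumps(response_format).replace("'", "''")
    return (
        f"SELECT ai_query('{endpoint}', "
        f"CONCAT('Classify this review: ', {text_column}), "
        f"responseFormat => '{fmt}') AS result "
        f"FROM {table}"
    )


# Assumed names, for illustration only.
sql = build_ai_query_sql("my-llm-endpoint", "main.default.reviews", "review")
print(sql)
```

The generated statement could then be run from a notebook or SQL editor; keeping the schema in a Python dict makes it easier to reuse when parsing the model's output.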

Python code executor for AI Agents

You can now quickly give your AI agents the ability to run Python code. Databricks now offers a pre-built Unity Catalog function that an AI agent can use as a tool to expand its capabilities beyond language generation. Learn more

Governance

New MANAGE privilege

You can now grant users the MANAGE privilege on Unity Catalog securable objects. The MANAGE privilege allows users to perform key actions on a Unity Catalog object, including:

Managing privileges

Dropping the object

Renaming the object

Transferring ownership
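
A minimal sketch of granting the privilege, assuming the standard Unity Catalog GRANT syntax applies to MANAGE. The table name and the group name below are hypothetical, and the helper only builds the statement text rather than executing it.

```python
def grant_manage_sql(securable_type: str, securable_name: str, principal: str) -> str:
    """Build a GRANT MANAGE statement; backticks quote the principal name."""
    escaped = principal.replace("`", "``")
    return f"GRANT MANAGE ON {securable_type} {securable_name} TO `{escaped}`"


# Hypothetical table and group, for illustration only.
statement = grant_manage_sql("TABLE", "main.sales.orders", "data-stewards")
print(statement)
```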

Remove metastore-level storage to enforce catalog-level storage isolation

If you use metastore-level storage for managed tables and volumes and want to enforce data storage isolation at the catalog or schema level, you can now remove the metastore-level storage without interrupting existing workloads.

Unity Catalog can now federate to Hive metastores and AWS Glue

You can now use Unity Catalog to access and govern data that is registered in a Hive metastore. This includes externally managed Hive metastores, AWS Glue, and legacy internal Databricks Hive metastores.

Network access events table is now available

This table logs an event whenever internet access is denied from your account. To access the table, admins must enable the access system schema.

Lakehouse federation now supports Oracle

Assign compute resources to groups

The new Dedicated access mode (previously Single user) allows you to assign dedicated all-purpose compute to a group or to a single user.

Data Engineering

View streaming workload metrics for your job runs and DLT pipelines

When you view job runs in the Jobs UI or the DLT UI, you can now view metrics such as backlog seconds, bytes, records, and files for sources supported by Structured Streaming, including Apache Kafka, Kinesis, and Auto Loader. Learn more

Support for collations in Apache Spark (DBR 16.1+)

You can now assign language-aware, case-insensitive, and accent-insensitive collations to STRING columns and expressions. These collations are used in string comparisons, sorting, grouping operations, and many string functions.
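
To make the idea concrete, here is a small Python illustration of what case-insensitive and accent-insensitive comparison means for grouping. It is a conceptual sketch only, not how Spark implements collations; the fold helper and the sample names are invented for this example.

```python
import unicodedata


def fold(text: str, case_insensitive: bool = True, accent_insensitive: bool = True) -> str:
    """Return a comparison key that ignores case and/or accents."""
    if accent_insensitive:
        # Decompose characters, then drop the combining accent marks.
        decomposed = unicodedata.normalize("NFD", text)
        text = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    if case_insensitive:
        text = text.casefold()
    return text


# Group names the way a case- and accent-insensitive collation would.
names = ["Zoë", "zoe", "ZOE", "Adam"]
groups = {}
for name in names:
    groups.setdefault(fold(name), []).append(name)
print(groups)
```

With both options enabled, the three spellings of "Zoe" fall into one group; with accent_insensitive=False, "Zoë" would be kept apart from the unaccented forms.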

Support for collations in Delta Lake (DBR 16.1+)

You can now define collations for columns when creating or altering a Delta table.

LITE mode for VACUUM (DBR 16.1+)

You can now use VACUUM table_name LITE to perform a lighter-weight vacuum operation that leverages metadata in the Delta transaction log.

Support for parameterizing USE CATALOG with the IDENTIFIER clause (DBR 16.1+)

The IDENTIFIER clause is now supported for the USE CATALOG statement. With this support, you can parameterize the current catalog based on a string variable or parameter marker.
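
A minimal sketch of the parameter-marker form, assuming a PySpark session whose sql method accepts named parameters through args. The use_catalog helper, the RecordingSession stand-in, and the catalog name "main" are illustrative; the stand-in exists only so the snippet runs without a cluster.

```python
def use_catalog(spark, catalog_name: str) -> None:
    """Switch the current catalog via a named parameter marker."""
    spark.sql(
        "USE CATALOG IDENTIFIER(:catalog_name)",
        args={"catalog_name": catalog_name},
    )


class RecordingSession:
    """Stand-in for a SparkSession that records the calls it receives."""

    def __init__(self):
        self.calls = []

    def sql(self, query, args=None):
        self.calls.append((query, args))


session = RecordingSession()
use_catalog(session, "main")
print(session.calls)
```

On a real cluster you would pass the active spark session instead of the stand-in. Passing the catalog name as a parameter avoids concatenating user input into the statement text.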

COMMENT ON COLUMN support for tables and views (DBR 16.1+)

The COMMENT ON statement supports altering comments for view and table columns.

New SQL functions (DBR 16.1+)

The following new built-in SQL functions are available:

dayname(expr) returns the three-letter English acronym for the day of the week for the given date.

uniform(expr1, expr2 [,seed]) returns a random value with independent and identically distributed values within the specified range of numbers.

randstr(length) returns a random string of length alphanumeric characters.
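
For readers more familiar with Python, the sketch below gives rough local analogues of the three functions. These are approximations written for illustration, not the SQL implementations: the optional seed on randstr is an addition for reproducibility, and this uniform always returns a float.

```python
import random
import string
from datetime import date

_DAY_NAMES = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]


def dayname(d: date) -> str:
    """Three-letter English day name for a date (Monday is index 0)."""
    return _DAY_NAMES[d.weekday()]


def uniform(low: float, high: float, seed=None) -> float:
    """Random float between low and high; a seed makes it repeatable."""
    return random.Random(seed).uniform(low, high)


def randstr(length: int, seed=None) -> str:
    """Random string of `length` alphanumeric characters."""
    rng = random.Random(seed)
    alphabet = string.ascii_letters + string.digits
    return "".join(rng.choice(alphabet) for _ in range(length))


print(dayname(date(2024, 12, 25)))
print(uniform(1, 10, seed=42))
print(randstr(8, seed=1))
```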

Platform

Personal access token validity reduced to two years

The default maximum lifetime for newly created Databricks-issued personal access tokens is now two years.

Budget policies for model serving endpoints

Budget policies are now supported on model serving endpoints. A budget policy consists of tags that are applied to any serverless compute activity incurred by a user assigned to the policy. The tags are logged in the billing records, allowing you to attribute serverless usage to specific budgets.
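
The attribution step can be pictured with a short Python sketch that sums usage per tag value. The record layout here (a usage_quantity number and a custom_tags mapping), the tag key, and the sample values are assumptions made for illustration; real billing records should be read from your account's billing data.

```python
from collections import defaultdict

# Hypothetical billing records carrying budget-policy tags.
records = [
    {"usage_quantity": 12.5, "custom_tags": {"team": "fraud"}},
    {"usage_quantity": 3.0, "custom_tags": {"team": "search"}},
    {"usage_quantity": 7.5, "custom_tags": {"team": "fraud"}},
    {"usage_quantity": 1.0, "custom_tags": {}},
]


def usage_by_tag(rows, tag_key):
    """Sum usage per value of tag_key; rows without the tag go to 'untagged'."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["custom_tags"].get(tag_key, "untagged")] += row["usage_quantity"]
    return dict(totals)


totals = usage_by_tag(records, "team")
print(totals)
```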

Monitor and revoke personal access tokens in your account

Account admins can view a token report in the account console to monitor and revoke personal access tokens (PATs). Learn more

Manage serverless outbound network connections with serverless egress control

Serverless egress control lets you restrict outbound access to specified internet destinations.

Serverless egress control strengthens your security posture by allowing you to manage outbound connections from your serverless workloads, reducing the risk of data exfiltration.

Using network policies, you can:

Enforce deny-by-default posture: Control outbound access with granular precision by enabling a deny-by-default policy for internet, cloud storage, and Databricks API connections.

Simplify management: Define a consistent egress control posture for all your serverless workloads across multiple serverless products.

Easily manage at scale: Centrally manage your posture across multiple workspaces and enforce a default policy for your Databricks account.

Safely roll out policies: Mitigate risk by evaluating the effects of any new policy in log-only mode before full enforcement.
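
The deny-by-default and log-only ideas can be illustrated with a toy Python model. This is not the Databricks network policy API; the EgressPolicy class, its fields, and the host names are invented purely to show how an allow list and a log-only mode interact.

```python
from dataclasses import dataclass, field


@dataclass
class EgressPolicy:
    """Toy deny-by-default policy with an optional log-only mode."""

    allowed_hosts: set = field(default_factory=set)
    log_only: bool = False
    denied_log: list = field(default_factory=list)

    def check(self, host: str) -> bool:
        """Return True if a connection to host may proceed."""
        if host in self.allowed_hosts:
            return True
        # Not on the allow list: always record the violation.
        self.denied_log.append(host)
        # In log-only mode the connection still goes through.
        return self.log_only


enforced = EgressPolicy(allowed_hosts={"pypi.org"})
dry_run = EgressPolicy(allowed_hosts={"pypi.org"}, log_only=True)

print(enforced.check("pypi.org"), enforced.check("example.com"))
print(dry_run.check("example.com"), dry_run.denied_log)
```

Running a policy in log-only mode first shows which destinations would have been blocked, so the allow list can be adjusted before enforcement is switched on.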

Unified login is now enabled on accounts without SSO

Unified login allows you to manage a single sign-on (SSO) configuration in your account and use it for both the account and your Databricks workspaces. If you have not already configured account-level or workspace-level SSO in Databricks, unified login is now enabled for all of your workspaces and cannot be disabled.

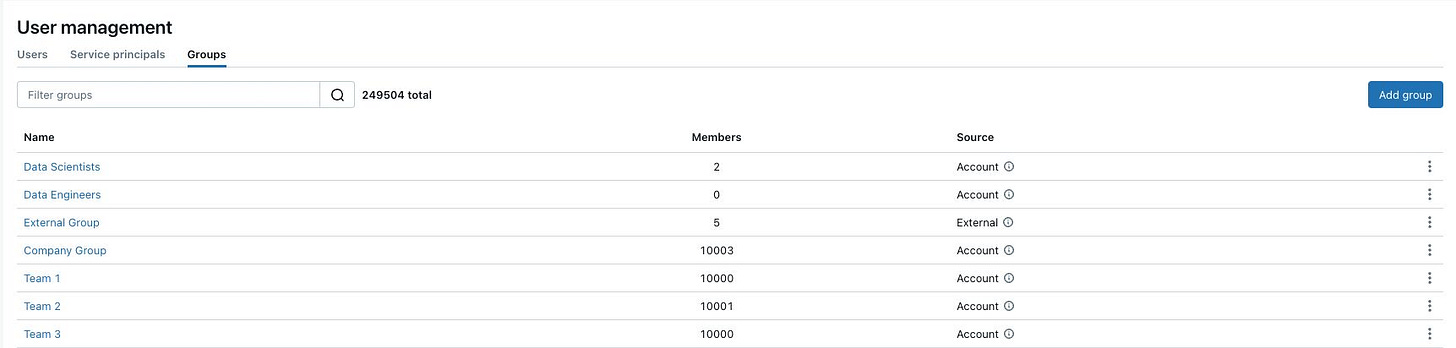

External groups are now labeled and immutable

External groups are groups that are created in Databricks from your identity provider. These groups are created using a SCIM provisioning connector and stay in sync with your identity provider. External groups are now explicitly labeled as External and can no longer be updated from the Databricks account console or workspace admin settings page by default. To update external group membership from the Databricks UI, an account admin can disable Immutable external groups in the account console preview page.

The default format for new notebooks is now IPYNB

The default format for new notebooks you create in your Databricks workspace is now IPYNB (.ipynb). Previously, the default format for notebooks was Source (.py, .sql, .scala, .r). To change the default format, use the Default file format for notebooks setting in the Developer pane of your workspace user settings.

AI/BI

AI/BI dashboards

The dataset editor includes a Schema tab that displays the fields and associated comments for the composed dataset.

Creating a new Genie space from a dashboard now applies the dashboard’s filter context in the linked space.

Dashboards can now link to an existing Genie space. See Enable a Genie space from your dashboard.

Dashboard schedules and subscriptions can now be managed using APIs.

Fixed an issue where selecting a null value reset all widget settings in static filters.

You can now align widget titles and descriptions to the left, right, or center of the widget.

Series colors are now less likely to repeat when you apply filters to a dashboard.

Heatmap labels are now placed correctly when temporal fields are used on the axes.

Chart legends displayed at the bottom of a widget now use ellipses to indicate truncated text.

Map legend labels now allocate sufficient width to avoid ellipses where possible.

Rendering maps of Japan in PDF subscriptions is no longer supported.

Fixed legacy table migration bugs.

AI/BI Genie

Audit logs now include entries for AI/BI Genie events.

Visualizations generated in a Genie space can now be vertically resized.

Generated visualizations in a Genie space are now editable.

Readability improvements for Genie spaces in dark mode.

Browser tabs open to Genie spaces now display the workspace name alongside Genie.

The Genie listing page now includes buttons to filter by Favorites, Popular, Last modified, and Owner.

The 1,000-space limit per workspace for Genie has been removed.

Fixed an issue with adding comments to a request for review.

A reminder to review the accuracy of Genie responses has been added to the Genie space UI.

The benchmarks UI now has an improved Add benchmark experience.

The benchmarks tab now shows the number of questions that evaluations are based on.

Genie’s ability to differentiate between situations that require date_sub and date_add functions has been improved. For more information about these functions, see date_sub function and date_add (days) function.

Delta Sharing

Test clean rooms with collaborators within the same metastore

You can now test your clean room before full deployment by adding a collaborator from within the same metastore. Learn more