What's new in Databricks - March 2024

AI, LLM & Data Science

Databricks released DBRX Instruct, the most powerful open source LLM

Databricks has released DBRX, available on Hugging Face and as a serverless endpoint in your Databricks workspace!

DBRX uses a Mixture-of-Experts (MoE) architecture. The model behaves like an ensemble of smaller expert networks, and only a subset of them is activated for each token. This reduces cost and increases inference speed, up to 150 tokens/sec!
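To make the "only a subset is activated" idea concrete, here is a minimal, generic top-k MoE routing sketch in plain Python. It is not DBRX's actual implementation: the toy experts, the router scores and the softmax-then-renormalize gating are illustrative assumptions. The 16 experts with 4 active per token mirror the configuration Databricks announced for DBRX.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(gate_logits, k):
    """Pick the top-k experts for one token and renormalize their gate weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_logits, k):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    out = 0.0
    for i, w in route_token(gate_logits, k):
        out += w * experts[i](x)
    return out

if __name__ == "__main__":
    # 16 toy "experts": expert i simply scales its input by (i + 1).
    experts = [lambda x, s=i + 1: s * x for i in range(16)]
    # Hypothetical router scores for a single token.
    gate_logits = [0.1 * i for i in range(16)]
    print([i for i, _ in route_token(gate_logits, k=4)])
    print(moe_forward(1.0, experts, gate_logits, k=4))
```

Only 4 of the 16 expert functions are evaluated per token, which is where the compute savings come from: total capacity grows with the number of experts while per-token cost tracks only the active ones.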

DBRX handily beats established open source models such as LLaMA2-70B, Mixtral, and Grok-1 on language understanding, programming, math, and logic benchmarks.

DBRX also beats GPT-3.5 on most benchmarks.

DBRX was trained with Databricks Mosaic ML, drastically reducing training cost while delivering impressive performance. This showcases Databricks' expertise and makes custom model training and fine-tuning accessible to every business.

DBRX Base and DBRX Instruct are now available in Model Serving. Learn more: Implement your Chatbot with DBRX
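As a rough sketch of calling DBRX Instruct from outside a notebook, the standard-library snippet below builds a chat request against a Model Serving endpoint's `/serving-endpoints/<name>/invocations` route. The endpoint name `databricks-dbrx-instruct` and the `DATABRICKS_HOST` / `DATABRICKS_TOKEN` environment variables are assumptions; check the Serving page of your workspace for the actual endpoint name.

```python
import json
import os
import urllib.request

# Assumption: name of the pay-per-token DBRX Instruct endpoint in your workspace.
ENDPOINT_NAME = "databricks-dbrx-instruct"

def build_chat_request(host, token, prompt, endpoint=ENDPOINT_NAME, max_tokens=256):
    """Build (but do not send) a chat-completion request for a serving endpoint."""
    url = f"{host.rstrip('/')}/serving-endpoints/{endpoint}/invocations"
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )

if __name__ == "__main__":
    host = os.environ.get("DATABRICKS_HOST")
    token = os.environ.get("DATABRICKS_TOKEN")
    # Only send the request when credentials are configured.
    if host and token:
        req = build_chat_request(host, token, "What is a Mixture-of-Experts model?")
        with urllib.request.urlopen(req) as resp:
            body = json.load(resp)
        # Chat endpoints return an OpenAI-style body with a "choices" list.
        print(body["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes the payload easy to inspect before pointing it at a real workspace.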

Real-time query and transformation with Feature Serving

Databricks online tables already let you serve tables in real time, providing millisecond lookups for your model features.

However, some features require a live transformation (e.g., the distance between the user and a location). Databricks Feature Serving simplifies such transformations: the required features are automatically fetched from online tables and transformed live by Feature Serving endpoints. Like Databricks Model Serving endpoints, Feature Serving endpoints automatically scale with real-time traffic and provide a high-availability, low-latency service at any scale. Learn more
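The user-to-location distance mentioned above is a typical on-demand feature. Below is a minimal sketch of the transformation itself, the haversine great-circle distance, written as plain Python of the kind you might register as a Python UDF in Unity Catalog. The request-body helper and the column names (`user_id`, `user_lat`, `user_lon`) are hypothetical and only illustrate the common `dataframe_records` input shape for serving endpoints.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def build_feature_request(rows):
    """Request body with one record per lookup (hypothetical column names)."""
    return {"dataframe_records": rows}

if __name__ == "__main__":
    # The user's live position comes with the request; the store position
    # would be looked up from an online table.
    print(round(haversine_km(48.8566, 2.3522, 51.5074, -0.1278), 1))
    print(build_feature_request([{"user_id": "u1", "user_lat": 48.8566, "user_lon": 2.3522}]))
```

Computing the distance at request time avoids precomputing it for every user/location pair, which is the point of doing the transformation in the serving layer.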

MLflow now enforces quota limits

MLflow now imposes quota limits on the total number of parameters, tags, and metric steps per run, and on the total number of runs per experiment. The limits apply to both existing and new runs and experiments. Learn more
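One way to stay under a metric-step quota on long training loops is to log a downsampled curve. The sketch below picks evenly spaced steps (always keeping the first and last) within a budget. The budget value is a hypothetical placeholder, not the actual Databricks quota, and `log_loss_curve` is an illustrative helper that uses the standard `mlflow.start_run` / `mlflow.log_metric` calls.

```python
def steps_to_log(total_steps, budget):
    """Return at most `budget` evenly spaced step indices from range(total_steps).

    The first and last steps are always kept when budget >= 2.
    """
    if total_steps <= 0 or budget <= 0:
        return []
    if total_steps <= budget:
        return list(range(total_steps))
    if budget == 1:
        return [total_steps - 1]
    span = total_steps - 1
    # Spacing is > 1 here, so rounding never produces duplicates.
    return sorted({round(i * span / (budget - 1)) for i in range(budget)})

def log_loss_curve(losses, budget=1_000):
    """Log a downsampled loss curve to MLflow (budget is a hypothetical value)."""
    import mlflow  # requires the mlflow package

    keep = steps_to_log(len(losses), budget)
    with mlflow.start_run():
        for step in keep:
            mlflow.log_metric("loss", losses[step], step=step)
```

Passing an explicit `step` keeps the x-axis faithful in the MLflow UI even though most steps are skipped.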

Google Cloud Vertex AI is supported for external models

Databricks Model Serving lets you define and proxy external models from all major cloud providers. This simplifies governance by adding security and tracking on top of any external model. Google Cloud Vertex AI is now supported!
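A sketch of what an external-model endpoint for Vertex AI might look like, built as a plain config dict and created through the MLflow deployments client. The provider string `google-cloud-vertex-ai` and the `google_cloud_vertex_ai_config` fields reflect our understanding of the external models docs and should be verified; the model name, project, region and secret scope are hypothetical.

```python
def vertex_ai_endpoint_config(model_name, project_id, region, secret_ref):
    """Endpoint config that proxies a Vertex AI chat model (field names assumed)."""
    return {
        "served_entities": [
            {
                "name": "vertex-chat",
                "external_model": {
                    "name": model_name,
                    "provider": "google-cloud-vertex-ai",
                    "task": "llm/v1/chat",
                    "google_cloud_vertex_ai_config": {
                        "project_id": project_id,
                        "region": region,
                        # Reference a Databricks secret rather than pasting the key.
                        "private_key": secret_ref,
                    },
                },
            }
        ]
    }

def create_vertex_endpoint(endpoint_name, config):
    """Create the endpoint in the current workspace (requires mlflow and credentials)."""
    from mlflow.deployments import get_deploy_client

    client = get_deploy_client("databricks")
    return client.create_endpoint(name=endpoint_name, config=config)

if __name__ == "__main__":
    cfg = vertex_ai_endpoint_config(
        "gemini-pro", "my-gcp-project", "us-central1", "{{secrets/my_scope/vertex_key}}"
    )
    print(cfg)
```

Storing the service-account key as a secret reference is what lets the platform add governance on top: callers hit the Databricks endpoint and never see the Google credentials.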

Data Engineering on Databricks

Delta Live Tables + Feature Store

You can now use any Delta Live Table in Unity Catalog with a primary key as a feature table for model training or inference. This further unifies the Data Intelligence Platform! Learn more
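A minimal sketch of both sides: a DDL-style schema with a primary key that could be passed as the `schema` argument of a `@dlt.table` definition, and a training set that looks features up from that table with `FeatureLookup`. All table and column names are hypothetical, and primary-key support in DLT schemas is assumed from the announcement above. The feature-engineering import is deferred because it only exists on Databricks.

```python
# Hypothetical names: catalog "main", schema "demo", columns below.
FEATURE_TABLE = "main.demo.user_features"
LOOKUP_KEY = "user_id"

# Schema for the DLT table; the PRIMARY KEY constraint is what lets it
# serve as a feature table.
USER_FEATURES_SCHEMA = (
    f"{LOOKUP_KEY} STRING NOT NULL, "
    "purchases_30d BIGINT, "
    "avg_basket_value DOUBLE, "
    f"CONSTRAINT user_features_pk PRIMARY KEY ({LOOKUP_KEY})"
)

def build_training_set(labels_df):
    """Join features from the DLT table onto a labels DataFrame (Databricks only)."""
    from databricks.feature_engineering import FeatureEngineeringClient, FeatureLookup

    fe = FeatureEngineeringClient()
    lookups = [
        FeatureLookup(
            table_name=FEATURE_TABLE,
            lookup_key=LOOKUP_KEY,
            feature_names=["purchases_30d", "avg_basket_value"],
        )
    ]
    training_set = fe.create_training_set(
        df=labels_df, feature_lookups=lookups, label="label"
    )
    return training_set.load_df()
```

Because the pipeline keeps the table fresh and the primary key drives the join, the same table backs both training and inference lookups.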

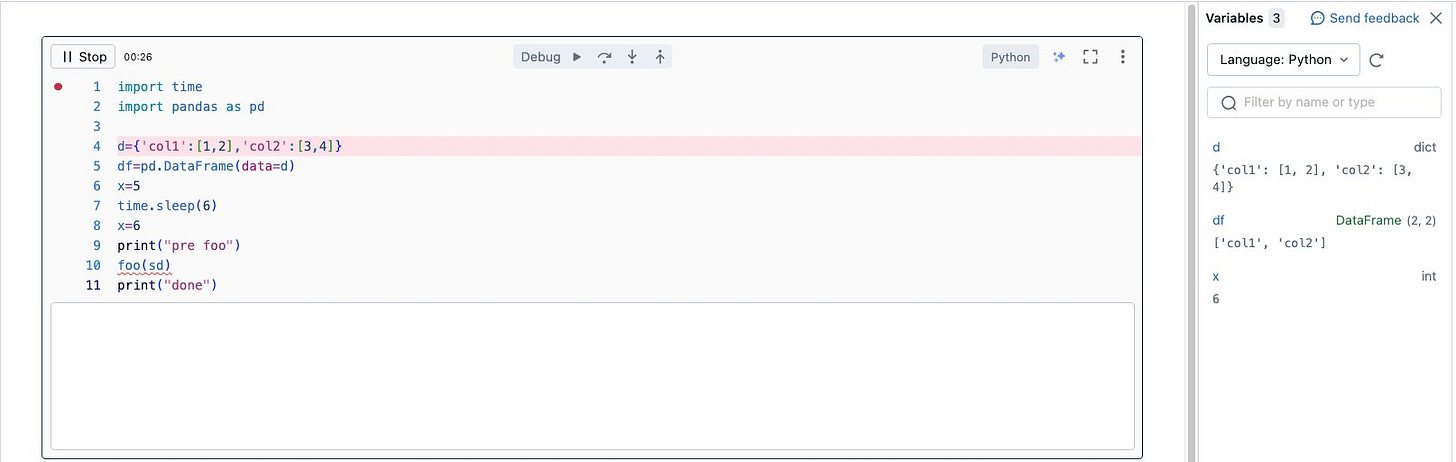

Interactive Notebook debugging

Probably one of the most underrated features! Databricks now supports interactive Python debugging in notebooks. You can step through your code line by line and inspect variable values to fix errors. Learn more

Governance & Unity Catalog

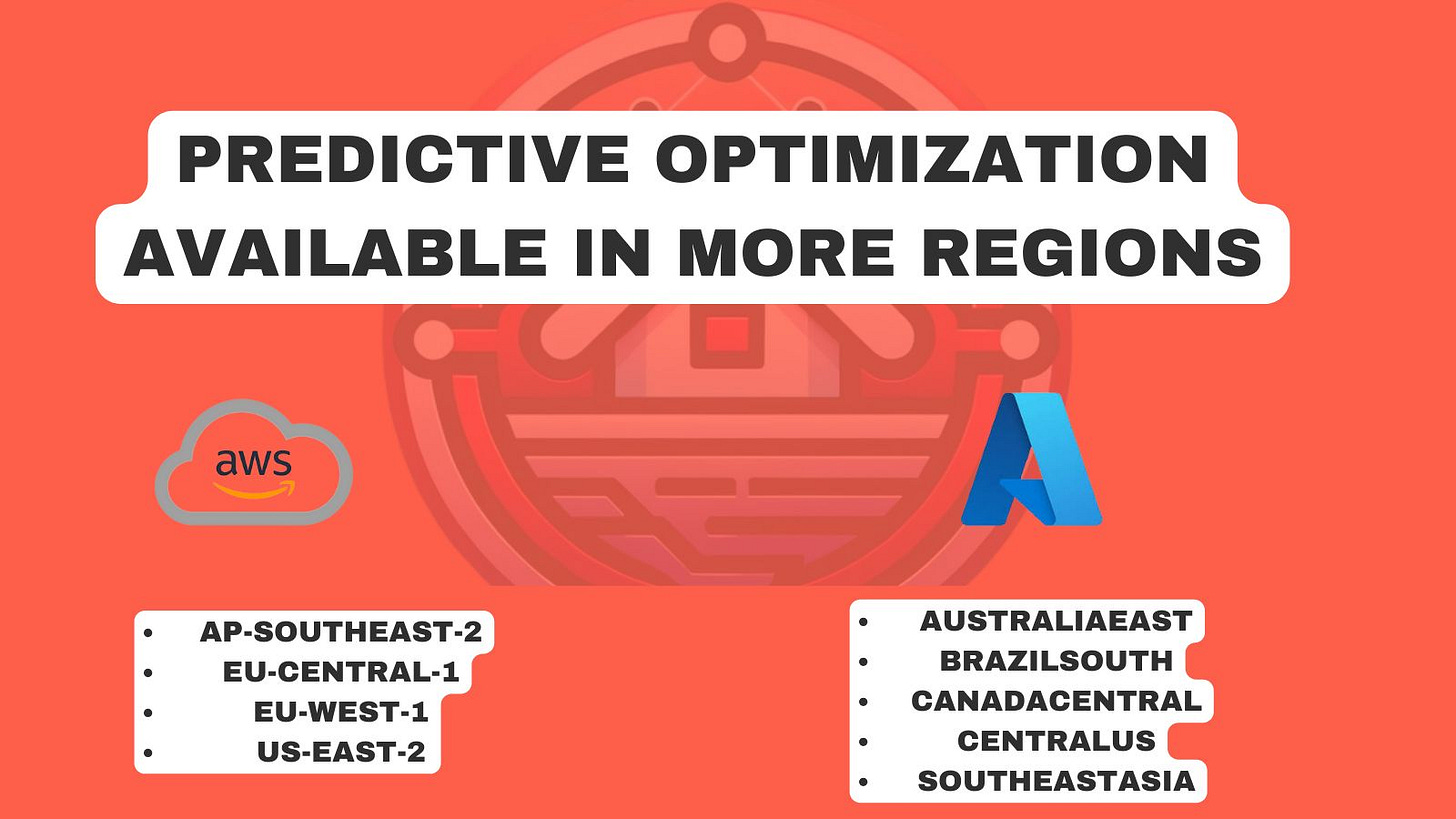

More regions for predictive optimization

Predictive optimization is now available in more regions. It simplifies your data management, leveraging AI to accelerate queries and reduce storage costs! Turn it on and let the engine pick the best optimizations for you!
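Turning it on is a one-line SQL statement per catalog or schema. The helper below is a small sketch that builds the `ALTER CATALOG | SCHEMA ... ENABLE | DISABLE | INHERIT PREDICTIVE OPTIMIZATION` statement with quoted identifiers; in a notebook you would pass the result to `spark.sql(...)`. The helper itself and the names `main` / `main.sales` are illustrative.

```python
VALID_LEVELS = {"CATALOG", "SCHEMA"}
VALID_ACTIONS = {"ENABLE", "DISABLE", "INHERIT"}

def quote_ident(name):
    """Backtick-quote each dot-separated part of a Unity Catalog name."""
    return ".".join("`" + part.replace("`", "``") + "`" for part in name.split("."))

def predictive_optimization_sql(level, name, action="ENABLE"):
    """Build the statement that enables, disables or inherits predictive optimization."""
    level, action = level.upper(), action.upper()
    if level not in VALID_LEVELS:
        raise ValueError(f"level must be one of {sorted(VALID_LEVELS)}")
    if action not in VALID_ACTIONS:
        raise ValueError(f"action must be one of {sorted(VALID_ACTIONS)}")
    return f"ALTER {level} {quote_ident(name)} {action} PREDICTIVE OPTIMIZATION"

if __name__ == "__main__":
    # In a Databricks notebook: spark.sql(predictive_optimization_sql("catalog", "main"))
    print(predictive_optimization_sql("catalog", "main"))
    print(predictive_optimization_sql("schema", "main.sales", "inherit"))
```

INHERIT lets a schema follow its parent catalog's setting, so enabling at the catalog level is usually enough.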

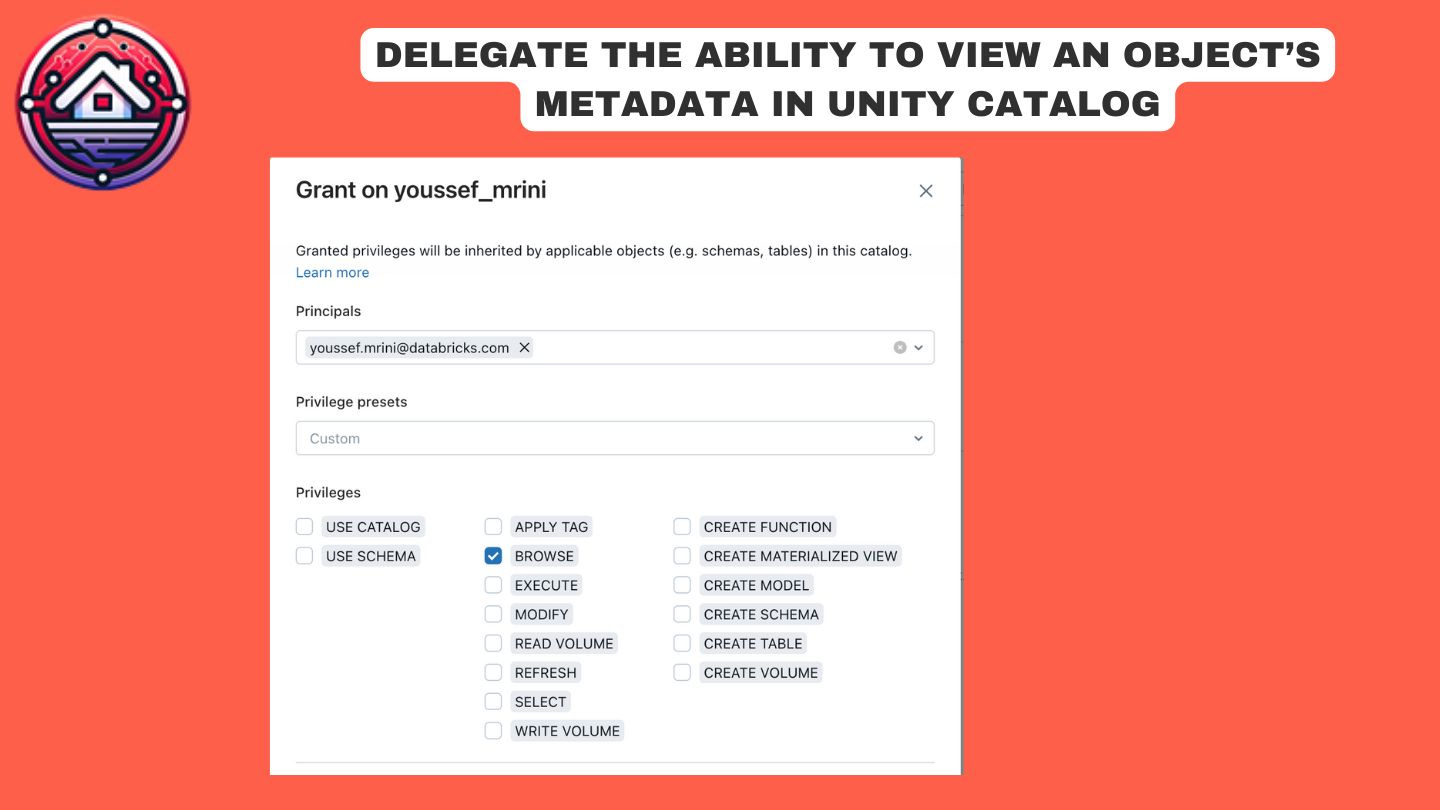

New Unity Catalog permission: BROWSE

You can now grant users, service principals, and account groups permission to view a Unity Catalog object’s metadata using the new BROWSE privilege. This enables users to discover data without having read access to the data. A user can view an object’s metadata using Catalog Explorer, the schema browser, search results, the lineage graph, information_schema, and the REST API. Learn more
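Granting discovery-only access is a standard `GRANT` statement. The sketch below builds `GRANT BROWSE ON CATALOG ... TO ...` (and the matching `REVOKE`) with backtick-quoted names, to be run with `spark.sql(...)` in a notebook or in a SQL editor. We assume the privilege is granted at the catalog level; the helper and the `data-consumers` group are hypothetical.

```python
def _quote(name):
    """Backtick-quote a single identifier or principal name."""
    return "`" + name.replace("`", "``") + "`"

def grant_browse_sql(catalog, principal):
    """Let `principal` see metadata in `catalog` without reading any data."""
    return f"GRANT BROWSE ON CATALOG {_quote(catalog)} TO {_quote(principal)}"

def revoke_browse_sql(catalog, principal):
    """Undo the grant above."""
    return f"REVOKE BROWSE ON CATALOG {_quote(catalog)} FROM {_quote(principal)}"

if __name__ == "__main__":
    # In a Databricks notebook: spark.sql(grant_browse_sql("main", "data-consumers"))
    print(grant_browse_sql("main", "data-consumers"))
```

Users who find an interesting table through BROWSE still need SELECT (typically requested from the owner) before they can query it.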

Dev Experience & Platform admin

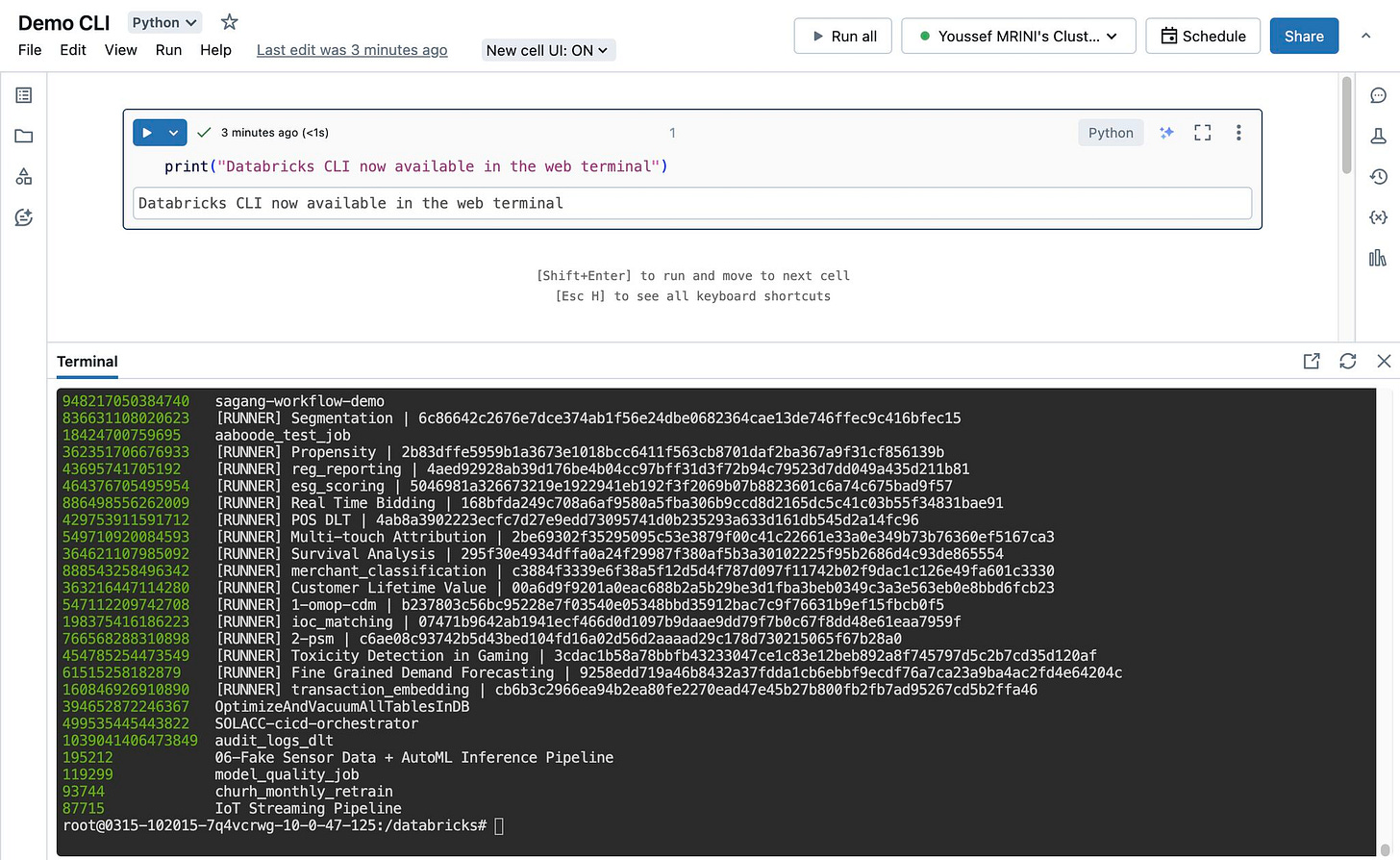

Databricks CLI is now available in the web terminal

Using the Databricks CLI is now super easy: just run databricks in the web terminal and the CLI is set up for you, with no need to configure your credentials every time!

Cluster libraries now support requirements.txt files

You can now install cluster libraries using a requirements.txt file stored in a workspace file or Unity Catalog volume.

In Single user and Shared access mode clusters, the requirements.txt file can reference other files.
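Besides the cluster UI, the install can be scripted. Below is a standard-library sketch against the Libraries API (`POST /api/2.0/libraries/install`), assuming the library spec accepts a `requirements` field pointing at a workspace file or volume path. The volume path, the `CLUSTER_ID` variable and the helper name are hypothetical.

```python
import json
import os
import urllib.request

def build_install_request(host, token, cluster_id, requirements_path):
    """Build (but do not send) a request installing a requirements.txt on a cluster."""
    url = f"{host.rstrip('/')}/api/2.0/libraries/install"
    payload = {
        "cluster_id": cluster_id,
        # Assumed field name for requirements.txt-based libraries.
        "libraries": [{"requirements": requirements_path}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )

if __name__ == "__main__":
    host = os.environ.get("DATABRICKS_HOST")
    token = os.environ.get("DATABRICKS_TOKEN")
    cluster_id = os.environ.get("CLUSTER_ID")
    # Only send when all settings are present.
    if host and token and cluster_id:
        req = build_install_request(
            host, token, cluster_id, "/Volumes/main/default/libs/requirements.txt"
        )
        with urllib.request.urlopen(req) as resp:
            print(resp.status)
```

Keeping the dependency list in a volume means the same file can be versioned and shared across clusters instead of listing packages one by one.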

In a nutshell…

You can now restrict changing a job owner and the run-as setting

You can now restrict a personal access token for a service principal

JDK 11 is removed from DBR 15+. Databricks recommends upgrading to JDK 17.

Accessing resources from serving endpoints using instance profiles is now GA

Model Serving is HIPAA compliant in all regions

Provisioned throughput in Foundation Model APIs is GA and HIPAA compliant