What's new in Databricks - March 2025

March 2025 Release Highlights

Automatic liquid clustering is now in Public Preview

Serverless compute can now use instance profiles for data access

Write procedural SQL scripts based on ANSI SQL/PSM

Data Engineering

ServiceNow Lakeflow Connector is now available

You can now ingest data from ServiceNow using Databricks Lakeflow Connect.

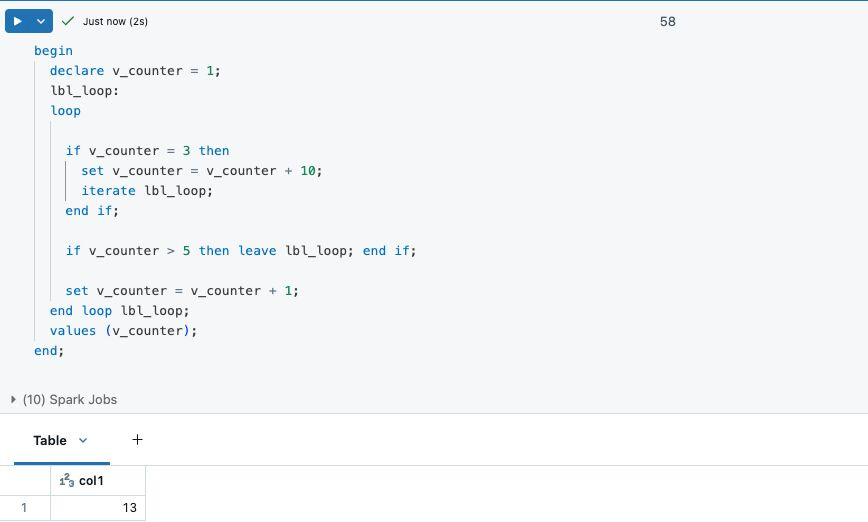

Write procedural SQL scripts based on ANSI SQL/PSM

You can now use scripting capabilities based on ANSI SQL/PSM to write procedural logic with SQL, including control flow statements, local variables, and exception handling. See the documentation.

Table and view level default collation

You can now specify a default collation for tables and views. This simplifies the creation of tables and views where all or most columns share the same collation. See the documentation.

Support for transformWithStateInPandas on standard compute

You can now use transformWithStateInPandas on compute configured with standard access mode.

Support for appending data to fine-grained access controlled tables on dedicated compute

In Databricks Runtime 16.3 and above, dedicated compute supports appending to Unity Catalog objects that use fine-grained access control.

Enhanced support for self-joins with fine-grained access controlled objects on dedicated compute

In Databricks Runtime 16.3 and above, the data filtering functionality for fine-grained access control on dedicated compute automatically synchronizes snapshots between dedicated and serverless compute resources, so both sides of a self-join read the same snapshot. The exceptions are materialized views and any views, materialized views, and streaming tables shared using Delta Sharing.

Governance and data sharing

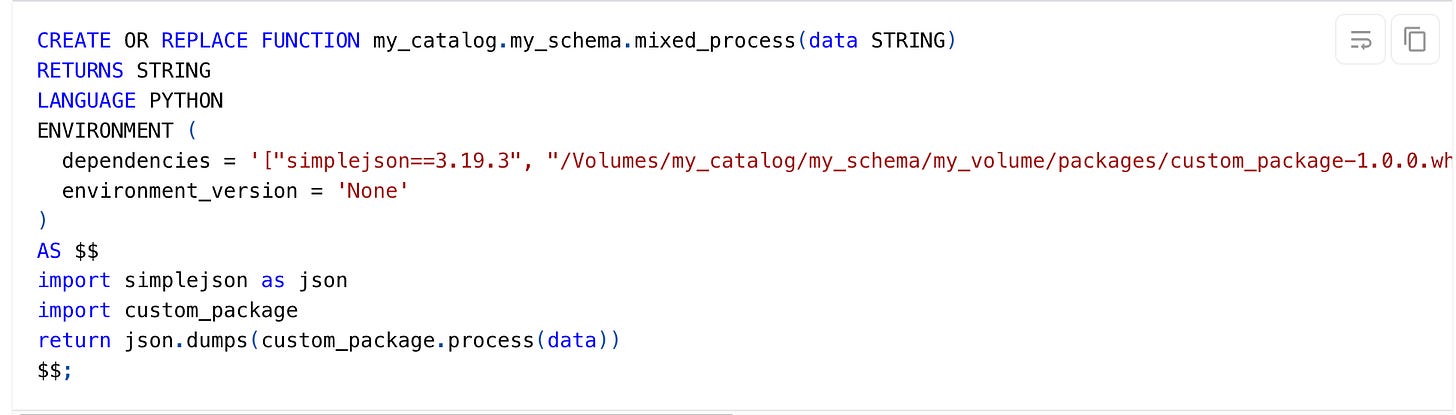

Install custom dependencies in Unity Catalog Python UDFs

You can extend the functionality of Unity Catalog Python UDFs beyond the Databricks Runtime environment by defining custom dependencies for external libraries.

Delta Sharing support for query optimizer statistics is GA

Data recipients accessing shared tables can now benefit from optimizer statistics to improve query performance. For this to work, the provider needs to have enabled Predictive Optimization or run the ANALYZE TABLE command.

Hive metastore federation is GA

Hive metastore federation enables you to use Unity Catalog to access and govern data that is registered in a Hive metastore. This includes externally managed Hive metastores, AWS Glue, and legacy internal Databricks Hive metastores.

Bind storage credentials, service credentials, and external locations to specific workspaces

The ability to bind storage credentials, service credentials, and external locations to specific workspaces is now generally available. Workspace binding prevents access to those objects from other workspaces. This feature is especially helpful if you use workspaces to isolate user data access.

OIDC federation for Databricks-to-open Delta Sharing

OIDC federation is an alternative to using long-lived Databricks-issued bearer tokens to connect non-Databricks recipients to providers. It enables fine-grained access control, supports MFA, and reduces security risks by eliminating the need for recipients to manage and secure shared credentials.

Databricks-to-open sharing with OIDC federation supports user-to-machine (U2M) authentication for recipients on Power BI and Tableau, and machine-to-machine (M2M) OAuth client credentials authentication from Python client apps registered in a recipient's IdP. See the documentation.
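
For the M2M flow, the client app first obtains a short-lived token from the recipient's IdP using the standard OAuth 2.0 client credentials grant (RFC 6749, section 4.4). The sketch below uses only the Python standard library and builds that token request without sending it. The token endpoint URL, client ID, secret, and scope are hypothetical placeholders, and the sketch does not show how the token is then presented to the Delta Sharing server.

```python
import base64
import urllib.parse
import urllib.request


def build_client_credentials_request(
    token_url: str, client_id: str, client_secret: str, scope: str
) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client credentials token request."""
    # grant_type and scope go in a form-encoded body (RFC 6749, section 4.4.2).
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": scope}
    ).encode("ascii")
    # HTTP Basic client authentication (RFC 6749, section 2.3.1): form-encode
    # the ID and secret, join them with ":", then base64-encode the pair.
    pair = f"{urllib.parse.quote_plus(client_id)}:{urllib.parse.quote_plus(client_secret)}"
    basic = base64.b64encode(pair.encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        token_url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/x-www-form-urlencoded",
            "Authorization": f"Basic {basic}",
        },
    )


# Hypothetical IdP token endpoint and app registration values.
req = build_client_credentials_request(
    "https://idp.example.com/oauth2/token", "my-client-id", "my-secret", "delta-sharing"
)
```

If sent with urllib.request.urlopen, a successful response is a JSON object whose access_token field holds the token, along with token_type and, typically, expires_in. Using HTTP Basic authentication rather than putting the secret in the body is a choice made here; many IdPs accept either.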

Delta Sharing supports renaming tables

Delta Sharing now supports renaming shared tables. If a provider renames a shared table, the table on the recipient side is automatically reconciled without errors.

Platform

Databricks Apps: Control access to resources based on user permissions

Databricks Apps can now use on-behalf-of-user authorization to ensure apps interact with resources using the permissions assigned to the app user. You can use on-behalf-of-user authorization when access to resources, such as UC-managed tables or model serving endpoints, should be restricted by the permissions assigned to the user.
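
On-behalf-of-user authorization means the app passes the calling user's identity downstream instead of using its own credentials. A minimal sketch, assuming the platform forwards the user's access token to the app in an X-Forwarded-Access-Token request header (an assumption to verify in the Databricks Apps documentation); the helper name user_auth_header is hypothetical.

```python
from typing import Dict, Mapping, Optional

# Assumed header that carries the app user's access token; verify the name
# against the Databricks Apps documentation.
USER_TOKEN_HEADER = "x-forwarded-access-token"


def user_auth_header(request_headers: Mapping[str, str]) -> Optional[Dict[str, str]]:
    """Return an Authorization header that acts as the app user, or None if absent."""
    for name, value in request_headers.items():
        # HTTP header names are case-insensitive, so compare in lowercase.
        if name.lower() == USER_TOKEN_HEADER and value:
            # Downstream calls sent with this header are authorized with the
            # user's own permissions rather than the app's.
            return {"Authorization": f"Bearer {value}"}
    # No user token: fail closed instead of falling back to app credentials.
    return None


print(user_auth_header({"X-Forwarded-Access-Token": "user-token"}))
```

Returning None instead of silently using the app's own service principal keeps the app from reading data the user is not allowed to see.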

Budget policies renamed to Serverless budget policies

Monitor and revoke PATs in your account

The token report is now in Public Preview. It enables account admins to monitor and revoke personal access tokens (PATs) in the account console. Databricks recommends that you use OAuth access tokens instead of PATs for greater security and convenience. See the documentation.

Download as Excel is supported in notebooks

For notebooks connected to serverless compute, you can now download tabular cell results as an Excel file. Download as Excel is also supported for notebooks connected to SQL warehouses.

Compute logs can be delivered to Unity Catalog Volumes

You can now deliver a compute resource's driver, worker, and event logs to a Unity Catalog volume. See the documentation.

GenAI & ML

AI Gateway now supports fallbacks for external models

You can now enable fallbacks on your model serving endpoints using AI Gateway. If your request to an external model fails, you can specify that the request be redirected to other external models served on that endpoint. See Configure AI Gateway using the UI.
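
Conceptually, fallbacks try the models served on an endpoint in a configured order and return the first successful response. The plain-Python sketch below models only that behavior. It is not the AI Gateway API or its configuration; the model names and the fake_call function are hypothetical, and the sketch treats any exception as a failure, whereas the real gateway decides which errors trigger a fallback.

```python
from typing import Callable, List, Sequence, Tuple


def query_with_fallbacks(
    served_models: Sequence[str],
    call_model: Callable[[str, str], str],
    prompt: str,
) -> Tuple[str, str]:
    """Try each served model in order; return (model, response) for the first success."""
    errors: List[str] = []
    for model in served_models:
        try:
            return model, call_model(model, prompt)
        except Exception as exc:  # Any failure moves on to the next model.
            errors.append(f"{model}: {exc}")
    # Every model failed: report all errors together.
    raise RuntimeError("all served models failed: " + "; ".join(errors))


# Hypothetical call function: the primary model is down, the secondary works.
def fake_call(model: str, prompt: str) -> str:
    if model == "primary-model":
        raise ConnectionError("503 Service Unavailable")
    return f"{model} answered: {prompt}"


result = query_with_fallbacks(["primary-model", "secondary-model"], fake_call, "hi")
print(result)  # → ('secondary-model', 'secondary-model answered: hi')
```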

Mosaic AI Agent Framework is generally available

Mosaic AI Agent Framework comprises a set of tools on Databricks designed to help developers build, deploy, and evaluate production-quality agents such as Retrieval Augmented Generation (RAG) applications. It is compatible with third-party frameworks like LangChain and LlamaIndex, allowing you to develop with your preferred framework while leveraging Databricks’ managed Unity Catalog, Agent Evaluation Framework, and other platform benefits.

Quickly iterate on agent development using the following features:

Create AI agents using any agent authoring library and MLflow. Parameterize your agents to experiment and iterate on agent development quickly.

Create agent tools to extend the LLM's capabilities beyond language generation.

Agent tracing lets you log, analyze, and compare traces across your agent code to debug and understand how your agent responds to requests.

Agent Evaluation helps evaluate the quality, cost, and latency of agents to identify quality issues and determine the root cause of those issues.

Improve agent quality using DSPy. DSPy can automate prompt engineering and fine-tuning to improve the quality of your gen AI agents.

Deploy agents to production with native support for token streaming and request/response logging, plus a built-in review app to get user feedback for your agent.

Agent Evaluation Framework: Demo video

Custom Metrics for Evaluating AI Agents on Databricks | MLflow Trace & AI Performance: Demo video

Agent Framework monitoring is in Beta

Agent Framework monitoring is in Beta. You can now set up monitoring for agents deployed using Mosaic AI Agent Framework.

AI Agent Monitoring Dashboard: Demo video

Claude 3.7 Sonnet now supported on Mosaic AI Model Serving

Anthropic's Claude 3.7 Sonnet is now supported on Mosaic AI Model Serving. It is available using the general-purpose AI function ai_query.

AI Builder: Information extraction is in Beta

AI Builder provides a simple, no-code approach to build and optimize domain-specific, high-quality AI agent systems for common AI use cases. In Beta, AI Builder supports information extraction and simplifies the process of transforming a large volume of unlabeled text documents into a structured table with extracted information for each document.

AI Gateway now supports pay-per-token endpoints

Mosaic AI Gateway now supports Foundation Model APIs pay-per-token endpoints.

Perform batch inference using AI Functions (Public Preview)

Mosaic AI now supports batch inference using AI Functions. This functionality is in Public Preview.

Demo video on how to run LLM Batch Inference with ai_query() on Databricks

Example demo video on how to create forecasts with SQL and AI functions on Databricks

External models now support OpenAI-compatible LLMs

External models in Mosaic AI Model Serving now support a custom provider option. You can use this option to specify alternate providers that are not explicitly listed as supported model providers, or models behind custom proxies, as long as they are OpenAI API compatible.
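
In this context, OpenAI API compatible means the provider or proxy accepts requests in the OpenAI chat completions format, typically at a /v1/chat/completions path. A minimal sketch of such a request body built with the standard library; the model name is hypothetical, and registering the custom provider in Model Serving is not shown.

```python
import json


def chat_completions_body(model: str, user_message: str, max_tokens: int = 256) -> str:
    """Serialize a request body in the OpenAI chat completions format."""
    payload = {
        "model": model,
        # The conversation is a list of role/content messages.
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return json.dumps(payload)


# Hypothetical model name exposed by a custom OpenAI-compatible proxy.
body = chat_completions_body("my-proxy-model", "Summarize this ticket.")
print(body)
```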

AI/BI

AI/BI dashboards

Chart migration fix: Dual-axis charts are now converted as expected when migrating from legacy dashboards to AI/BI dashboards.

Customize dashboard visualization settings by locale: Use the editing panel on your draft dashboard to select a locale and customize settings across all filter and visualization widgets.

Improved URL stability: Importing a new version of a dashboard in draft and publishing it no longer breaks filter values saved in existing URLs.

Query history update: When viewing the query history, scheduled dashboard update queries now display the dashboard publisher as the user instead of System Service Principal.

Increased custom sorting limits: You can now custom sort up to 500 items in a visualization.

New audit log emitted for dashboard downloads: Downloading dashboards as PDF now triggers the triggerDashboardSnapshot audit log event.

Dashboards are supported as a task type in workflows: Create a job task with the Dashboard task type to refresh dashboard results and optionally send subscription emails.

Calculated measures from dashboards better supported: Genie spaces created from dashboards are now better able to use any calculated measures defined on the dashboard.

Fixed null value styling: Pivot table cells with null values now display with the correct styling.

Sorting with filters now supported: Single-value and multi-value filters now support sorting.

AI/BI Genie

The Genie Conversations API is now available. You can use the Databricks REST API to ask questions, retrieve generated SQL and query results, or get the details associated with a Genie space. See the documentation.
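
A minimal sketch of starting a Genie conversation over REST with the standard library. The endpoint path /api/2.0/genie/spaces/{space_id}/start-conversation and the content body field reflect our reading of the Databricks REST API reference and should be verified there; the workspace URL, space ID, and token are hypothetical placeholders. The function builds the request without sending it.

```python
import json
import urllib.request


def start_conversation_request(
    workspace_url: str, space_id: str, token: str, question: str
) -> urllib.request.Request:
    """Build (but do not send) a request that asks a Genie space a question."""
    # Assumed endpoint path; check it against the REST API reference.
    url = f"{workspace_url.rstrip('/')}/api/2.0/genie/spaces/{space_id}/start-conversation"
    body = json.dumps({"content": question}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )


# Hypothetical workspace, space ID, and token.
req = start_conversation_request(
    "https://example.cloud.databricks.com/",
    "01ef-hypothetical",
    "dapi-hypothetical",
    "What were sales last month?",
)
```

Sending the request (for example with urllib.request.urlopen) and polling for the generated SQL and results are left out, since those response shapes should be taken from the API reference rather than assumed here.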

Improved creation flow: When you create a new Genie space, you only need to add tables to the space to start testing and iterating.

Conversations API update: Messages sent through the Conversations API now appear in the monitoring tab.

New Genie space UI: Genie now has a redesigned UI for authoring and chats. The new layout has more space for conversations and a reorganized interface for adding and refining instructions.

Privileged users can help refine instructions: Users with at least CAN EDIT permissions can now view the source SQL used to generate answers, allowing them to help refine Genie instructions.

Restricted table selection: To return results, users must have at least SELECT privileges on the Unity Catalog objects in the space. Authors are now restricted from adding tables where they have insufficient permissions.

Improved SQL matching: Genie can now better utilize example SQL statements when user prompts closely match the example SQL.

Easier-to-understand audit events: The updateConversationMessageFeedback audit event now includes a feedback_rating field to quickly see whether a rating is positive or negative.

Genie data sampling is now available in Public Preview: This feature improves Genie’s ability to translate user prompts into the right column and row values. To test this feature, contact your Databricks account team.

Genie now self-reflects: As Genie generates SQL, it self-reflects to fix issues and return higher-quality answers. This improves Genie’s ability to author filter conditions and reduces SQL errors.

Improved keyword contextualization: Genie has improved its ability to provide relevant context in its responses based on keywords in the user question.

Improved benchmark evaluation logic: Genie now supports column and row reordering and permits extraneous column selection in SQL results.
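
The relaxed comparison described above can be approximated in a few lines: a generated result matches when every expected column is present (extra columns allowed), regardless of column order, and when the rows match as a multiset, regardless of row order. This is a hypothetical sketch of the idea, not Genie's actual evaluation code; it assumes columns are matched by name and that every row in a result has the same columns.

```python
from collections import Counter
from typing import Any, Dict, List

Row = Dict[str, Any]


def results_match(expected: List[Row], actual: List[Row]) -> bool:
    """Return True if actual matches expected, ignoring row order, column order,
    and extra columns in actual. Columns are matched by name (an assumption)."""
    if not expected:
        return not actual
    columns = sorted(expected[0])  # A fixed order makes input column order irrelevant.
    # Every expected column must be present; extra actual columns are fine.
    if not actual or any(col not in actual[0] for col in columns):
        return False

    def project(rows: List[Row]) -> Counter:
        # Keep only the expected columns, then count identical rows so that
        # row order is ignored but duplicate rows still matter.
        return Counter(tuple(row[col] for col in columns) for row in rows)

    return project(expected) == project(actual)


expected = [{"region": "EU", "sales": 10}, {"region": "US", "sales": 20}]
# Same rows in a different order, columns reordered, plus an extra column.
actual = [{"sales": 20, "region": "US", "rank": 1}, {"sales": 10, "region": "EU", "rank": 2}]
print(results_match(expected, actual))  # → True
```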